- Author, Zoe Kleinman

- Role, BBC Technology Editor

Mark Zuckerberg is said to have started work as early as 2014 on Koolau Ranch, his sprawling 1,400-acre compound on the Hawaiian island of Kauai.

According to Wired magazine, the estate will include a shelter with its own energy and food supplies, though the carpenters and electricians working on the site were required to sign confidentiality agreements.

A six-foot-high wall blocks the site from view from a nearby road.

When someone asked him last year if he was building a “doomsday bunker,” the Facebook founder said an emphatic “no.” The roughly 5,000-square-foot underground space is “like a little shelter, a basement,” he explained.

However, that has not stopped the speculation, especially after he bought 11 more properties in the Crescent Park neighborhood of Palo Alto, California, and built about 7,000 square feet of underground space beneath them.

The New York Times pointed out that although the building permit said “basement”, some neighbors called it a “bunker” and even jokingly called it a “billionaire version of the Batcave.”

Other tech leaders appear to be making similar “disaster preparedness investments”—buying land, building underground facilities, and converting them into luxury bunkers.

LinkedIn co-founder Reid Hoffman once mentioned “apocalypse insurance”. He said that about half of the super-rich have this kind of “insurance”, and New Zealand is a popular refuge.

So are they really preparing for war, climate change, or some catastrophe we don’t yet know about?

The rapid development of artificial intelligence (AI) in recent years has only deepened this unease. Many people are worried by the sheer speed at which the technology is advancing.

Ilya Sutskever, chief scientist and co-founder of OpenAI, is reportedly one of them.

By mid-2023, San Francisco-based OpenAI had released ChatGPT, the chatbot now used by hundreds of millions of people around the world, and the company was rapidly rolling out updated versions.

However, as journalist Karen Hao reveals in her new book, by that summer Sutskever was becoming increasingly convinced that computer scientists were on the brink of achieving “artificial general intelligence” (AGI), the point at which machines match human intelligence.

She writes that Sutskever suggested at a meeting that the company should build an underground shelter for its top scientists before such a powerful technology was released, in case something went wrong.

Sutskever was widely reported to have said at the time: “Before artificial general intelligence is available, we will definitely build bunkers.” But exactly who “we” refers to is unclear.

The remark points to a striking paradox: many leading computer scientists and technology industry leaders are working flat out to develop highly intelligent AI, yet they also appear deeply afraid of what it might ultimately do.

So when, if ever, will artificial general intelligence arrive? And could it really prove transformative enough to frighten ordinary people?

‘Coming sooner than we thought’

Many technology leaders have claimed that artificial general intelligence is imminent. OpenAI chief executive Sam Altman said in December 2024 that it will emerge “sooner than most people in the world imagine.”

DeepMind co-founder Sir Demis Hassabis predicts it will happen within the next five to 10 years. Anthropic co-founder Dario Amodei wrote last year that “powerful AI”, his preferred term, could appear as early as 2026.

However, some are skeptical. Dame Wendy Hall, professor of computer science at the University of Southampton, said: “They are always moving the goalposts. It depends on who you ask.”

She added: “The scientific community believes that artificial intelligence technology is indeed amazing, but it is still far from true human intelligence.”

Babak Hodjat, chief technology officer of technology company Cognizant, agrees. He believes a series of “fundamental breakthroughs” is needed before artificial general intelligence can be realized.

He also pointed out that artificial general intelligence is unlikely to arrive in a single moment. AI is a rapidly evolving technology whose development is a continuous process, and countless companies around the world are racing to develop their own versions of it.

One of the reasons people in Silicon Valley are particularly excited is that artificial general intelligence is seen as a precursor to something more advanced still: “artificial superintelligence” (ASI), technology that surpasses human intelligence.

The concept of “the singularity” was attributed posthumously, in 1958, to the Hungarian-born mathematician John von Neumann. It refers to the moment when computer intelligence develops beyond human understanding.

Genesis, a 2024 book by Eric Schmidt, Craig Mundie, and the late Henry Kissinger, explores the idea of a technology so powerful, and so effective at decision-making and leadership, that humans may eventually hand over complete control to it.

They believe that it is not a question of “if it will happen” but “when”.

Everyone has money to spend and doesn’t have to work?

Proponents of the development of artificial general intelligence and artificial superintelligence preach their potential benefits with almost missionary zeal. They believe that this technology will be able to find new treatments for deadly diseases, solve climate change, and even create inexhaustible clean energy.

Elon Musk even claimed that super-intelligent AI may usher in a new era of “high income for all.”

He recently said that artificial intelligence will become extremely cheap and ubiquitous, and almost everyone will want to have their own “R2-D2 and C-3PO” (referring to the robots in “Star Wars”).

He enthused: “Everyone will have the best medical care, food, housing, transportation, and everything they need – a sustainable and prosperous life.”

Of course, there is a scary side to this. Could terrorists turn the technology into a devastating weapon? Or might it one day conclude for itself that humans are the root of the world’s problems and decide to destroy us?

“If it’s smarter than you, then we have to control it,” warned Tim Berners-Lee, the inventor of the World Wide Web, in an interview with the BBC earlier this month.

“We have to make sure we have the ability to shut it down.”

Governments are also taking protective measures. The United States is home to many leading AI companies. In 2023, then-President Biden signed an executive order requiring some companies to submit safety test results to the federal government. However, President Trump later reversed some of its provisions, calling the order a hindrance to innovation.

Meanwhile, the British government set up the AI Safety Institute two years ago, a government-funded research body that aims to better understand the risks posed by advanced artificial intelligence.

And the super-rich have their own “doomsday insurance” plans.

LinkedIn’s Hoffman once joked that saying you want to buy a house in New Zealand is really a kind of tacit code, one that those in the know will understand. Presumably the same goes for bunkers.

However, a very human flaw is also exposed here.

I met a security guard who worked for a billionaire who owned a “bunker.” He told me that if a disaster really occurred in the world, their security team’s first priority would be to kill the boss and then enter the bunker themselves. Judging from his tone, he didn’t seem to be joking.

Is this all unfounded nonsense?

Neil Lawrence is Professor of Machine Learning at the University of Cambridge. To him, the entire discussion of artificial general intelligence is nonsense.

He said: “The concept of ‘artificial general intelligence’ is as ridiculous as talking about building an ‘artificial general vehicle’.”

“The appropriate mode of transportation depends on the situation. I fly to Kenya on an Airbus A350, drive to university and work every day, and walk to restaurants to eat… There is no one mode of transportation that can do it all at the same time.”

In his view, talk of artificial general intelligence is just a distraction.

“We’ve created technology that allows ordinary people to talk directly to a machine for the first time and make it do what humans want it to do. That’s extraordinary — it’s completely transformative.”

“But what is worrying is that we are too easily attracted by the artificial general intelligence story created by big technology companies, and ignore what we should really think about – how to make these technologies better serve mankind.”

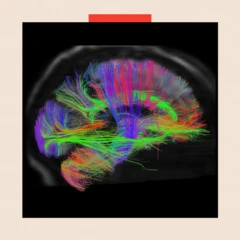

Existing AI tools are trained on massive amounts of data and are good at identifying patterns in it—whether it’s signs of tumors in medical images or predicting the next most likely word to appear in a sentence. However, no matter how lifelike their responses, AI still doesn’t have “feeling.”

“There are some ‘tricks’ to make a large language model (the technology underlying AI chatbots) behave as if it has a memory and can learn, but these are unsatisfactory and far inferior to humans,” said Cognizant’s Hodjat.

Vince Lynch, CEO of California-based IV.AI, also has reservations about exaggerated claims about artificial general intelligence.

“It’s great marketing,” he said. “If you say you’re building the smartest thing ever, people are going to want to pay you.”

He added: “This is not something that can be ‘achieved in two years’. It requires a lot of computing power, a lot of human creativity, and countless iterations.”

When asked if he believed that artificial general intelligence would one day be realized, he was silent for a long time and then said: “I really don’t know.”

Intelligence without consciousness

In some ways, artificial intelligence has surpassed the human brain. Generative AI tools can make you an expert on medieval history one minute and solve complex mathematical equations the next.

Some tech companies admit they don’t always know why their products generate certain responses. Meta even said that its AI system is showing signs of self-improvement.

But at the end of the day, no matter how smart the machines become, the human brain still has the upper hand at the biological level – with about 86 billion neurons and 600 trillion synapses, it far exceeds the scale of artificial systems.

The human brain never needs to pause between interactions and is constantly adapting to new information.

“If you tell a person, ‘Life has been discovered on planets outside the solar system,’ they will immediately remember it and change their world view accordingly,” Hodjat said. “But for a large language model, it will only ‘know’ this if you keep repeating that this is true.”

“Large language models also don’t have ‘metacognition’, that is, they don’t really know what they know. Humans seem to have a capacity for self-examination, sometimes called ‘consciousness’, which allows us to be aware of what we know.”

This is the core of human intelligence—and something that currently cannot be replicated in laboratories.

Main image source: The Washington Post via Getty Images/ Getty Images MASTER. The first image shows Mark Zuckerberg and a schematic photo of a bunker in an unknown location.

Does the increasing focus on individual survival by billionaires detract from collective efforts to mitigate global risks like climate change and pandemics?

Are Tech Billionaires Bracing for Doomsday? Insights on Their Preparations and Implications for Society

The Rise of “Prepper” Billionaires: A Growing Trend

Over the past decade, a noticeable trend has emerged: prominent figures in the tech industry are increasingly investing in preparations for catastrophic events. This isn’t about simple emergency kits; we’re talking about substantial, long-term investments in survival infrastructure, often referred to as “doomsday prepping.” The motivations range from concerns about global pandemics, climate change, and geopolitical instability to the risks posed by artificial intelligence. This surge in preparedness raises critical questions about how these figures perceive future threats and what that means for the rest of society.

Concrete Examples of Billionaire Preparations

Several high-profile tech billionaires have made their preparations public, or details have emerged through reporting. These aren’t rumors; they’re documented investments:

* Elon Musk: Musk’s stated goal of colonizing Mars is often framed as a “backup plan for humanity.” Beyond space exploration, he’s invested in Neuralink, which aims to merge human brains with AI, possibly as a safeguard against existential threats. His focus on long-term survival and species resilience is a key indicator.

* Peter Thiel: The PayPal co-founder has funded research into longevity and has openly discussed the possibility of societal collapse. He’s invested in seasteading – the concept of creating autonomous communities on the ocean – as a potential escape from terrestrial problems. His investments reflect a belief in alternative governance and escaping systemic risk.

* Sam Altman (OpenAI): Altman has spoken extensively about the potential dangers of advanced AI, including existential risks. While not explicitly “prepping” in the traditional sense, his focus on AI safety and control can be seen as a form of preparation for a future where AI poses a significant threat. This highlights concerns about AI safety, existential risk, and technological disruption.

* Reid Hoffman (LinkedIn): Hoffman has been a significant donor to preparedness organizations and has personally invested in survival-focused ventures. He’s publicly discussed the importance of being prepared for a range of potential disasters. His involvement demonstrates a belief in community resilience and proactive disaster planning.

* Bunker Construction & Luxury Survival Estates: A growing industry caters specifically to the ultra-wealthy, offering underground bunkers, fortified estates, and private islands equipped to withstand various catastrophes. These aren’t just shelters; they’re self-sufficient ecosystems designed for long-term habitation.

The Types of Threats Driving Preparations: A Detailed Breakdown

The anxieties fueling this trend are multifaceted. Here’s a closer look at the primary concerns:

- Pandemics & Biological Warfare: The COVID-19 pandemic served as a stark reminder of the vulnerability of global systems to infectious diseases. Billionaires are investing in advanced medical technologies, research into rapid vaccine development, and secure, isolated living environments. Pandemic preparedness and biosecurity are key areas of focus.

- Climate Change & Environmental Collapse: Extreme weather events, rising sea levels, and resource scarcity are driving concerns about societal disruption. Investments include sustainable agriculture, water purification technologies, and relocation to more resilient geographic locations. Climate resilience, resource management, and sustainable living are central themes.

- Geopolitical Instability & Nuclear War: Escalating international tensions and the proliferation of nuclear weapons create a constant threat of large-scale conflict. Preparations include secure communication systems, hardened shelters, and stockpiles of essential supplies. National security, conflict avoidance, and emergency communication are prioritized.

- Artificial Intelligence Risks: The rapid advancement of AI raises concerns about job displacement, autonomous weapons systems, and the potential for AI to escape human control. Investments focus on AI safety research, ethical AI development, and strategies for mitigating the risks of advanced AI. AI ethics, AI control, and technological unemployment are critical considerations.

- Economic Collapse & Systemic Failure: Concerns about financial instability, hyperinflation, and the potential collapse of global economic systems are also driving preparations. Investments include precious metals, alternative currencies, and self-sufficient economic models. Financial resilience, alternative economies, and decentralization are gaining traction.

The Implications for Society: A Two-Tiered Future?

The billionaire “prepper” phenomenon raises profound ethical and societal questions.

* Exacerbating Inequality: The ability to prepare for doomsday scenarios is largely limited to the ultra-wealthy, creating a stark divide between those who can afford to protect themselves and those who cannot. This could lead to increased social unrest and instability in the event of a major catastrophe.

* Moral Obligations & Resource Allocation: Should billionaires be using their resources to address systemic problems that contribute to these threats (like climate change) rather than building escape hatches for themselves? This debate highlights the tension between individual self-preservation and collective obligation.

* The “Fortress Mentality” & Social Fragmentation: The construction of isolated, self-sufficient communities could further fragment society and erode social cohesion. A “fortress mentality” could hinder cooperation and collective action in times of crisis.