Fake AI Chatbots Top Mac App Store Charts, Raising Privacy Concerns

Table of Contents

- 1. Fake AI Chatbots Top Mac App Store Charts, Raising Privacy Concerns

- 2. Imitation and Deception

- 3. The Risks of Data Collection

- 4. Staying Safe in the Age of AI Apps

- 5. Frequently Asked Questions

- 6. What are the specific security risks content creators face when using fraudulent ChatGPT clone apps?

- 7. Beware of Dubious ChatGPT Clones Reappearing in the App Store: A Security Alert for Content Creators

- 8. The Resurgence of Fake AI Apps

- 9. Why Content Creators Are Prime Targets

- 10. Identifying Fake ChatGPT Apps: Red Flags to Watch For

- 11. Real-World Examples of ChatGPT Clone Scams

- 12. Protecting Your Data: Practical Steps for Content Creators

- 13. The Role of App Store Security & User Reporting

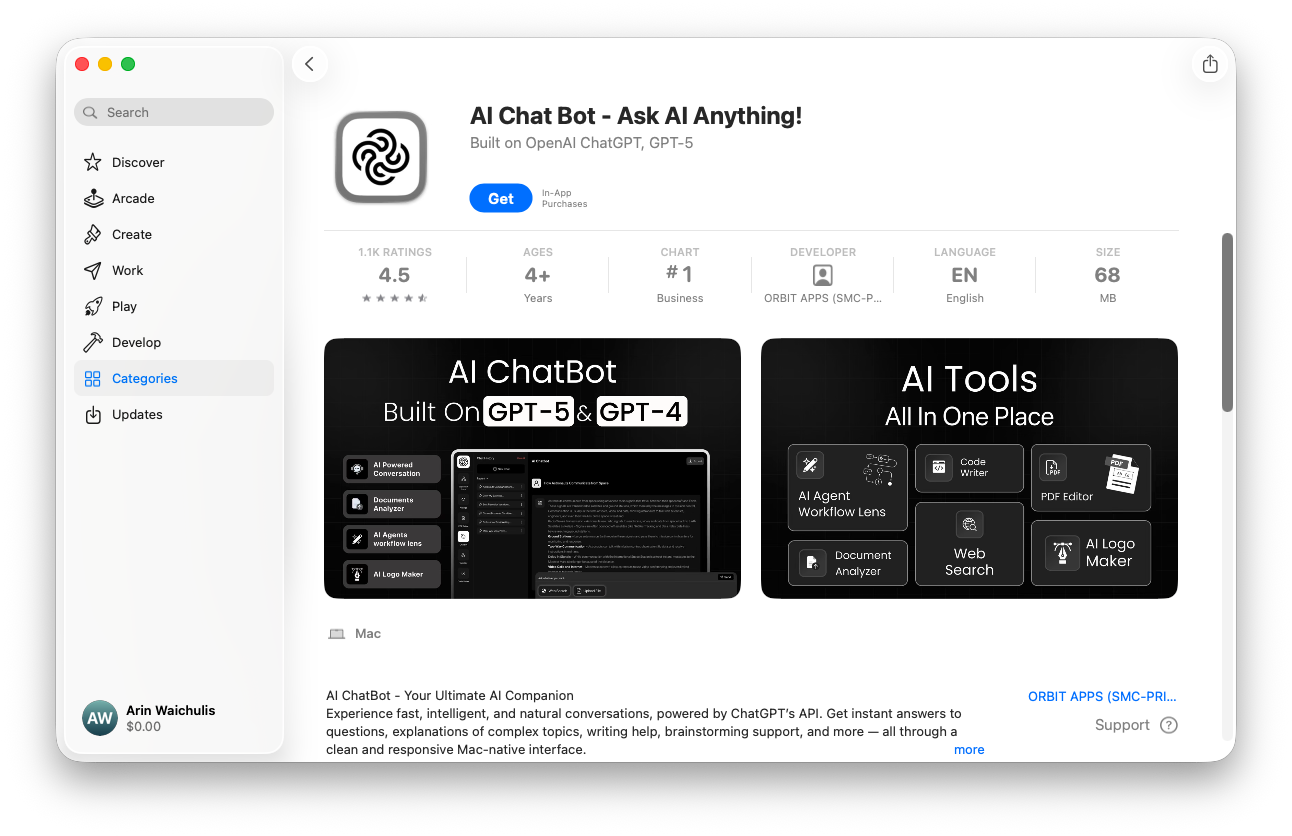

A wave of misleading AI chatbot applications has infiltrated the Mac App Store, prompting security alerts and renewed warnings about data privacy. Security researcher Alex Kleber recently identified a top-ranked Business application on the platform that blatantly impersonates OpenAI’s branding, raising concerns about potential data harvesting and misuse.

The surge of these deceptive apps follows a period of rapid growth in AI-powered tools, reminiscent of two years ago when OpenAI’s GPT-4 API fueled a proliferation of similar offerings. While many of those earlier applications have faded, the latest instances demonstrate a continued vulnerability in app store security protocols.

Imitation and Deception

The identified application, positioned as a leading “AI ChatBot” for macOS, closely mimics OpenAI’s visual identity, functionality, and marketing materials. Investigations reveal the app shares a developer connection with another virtually identical application, deploying matching names, interfaces, screenshots, and even a shared support website linked to a generic Google page.

Both applications originate from a developer account associated with a company address in Pakistan. Despite Apple’s increased efforts to remove fraudulent and copycat apps, including preventing over $9 billion in fraudulent transactions in 2025, these deceptive programs successfully bypassed the review process and achieved prominent placement on the U.S. Mac App Store.

Experts stress that app store approval and high rankings do not guarantee data security. A recent report by Private Internet Access (PIA) highlighted troubling transparency issues in many personal productivity apps. In one instance, an AI assistant secretly collected more user data than its App Store listing indicated, gathering not only message content and device identifiers but also names, emails, and usage statistics.

The Risks of Data Collection

The collection of user data linked to personal identities presents notable risks. Sensitive conversations and personal details could be vulnerable to exploitation, particularly if stored in poorly secured databases managed by untrustworthy entities. Concerns also arise from the possibility of data being sold to brokers or used for malicious purposes.

While Apple’s privacy labels provide some transparency, they rely on self-reporting by developers. The company currently lacks a robust verification system to ensure the accuracy of this information. This creates an opportunity for developers to misrepresent their data collection practices.

As AI technology continues to evolve, vigilance is crucial. Users should exercise extreme caution when downloading and using AI-powered applications, carefully reviewing privacy policies and understanding the potential risks to their personal data.

| Feature | Legitimate Apps | Deceptive Apps |
|---|---|---|
| Data Collection | Transparent, limited to functionality | Opaque, excessive, potentially misused |
| Branding | Original, consistent | Imitative, misleading |
| Developer Transparency | Verified identity, clear contact info | Obscured, potential shell companies |

Did You Know? Apple removed a significant number of fraudulent apps in 2025, preventing billions of dollars in potential losses, yet deceptive applications still slip through review.

Pro Tip: Always review an app’s privacy policy before downloading, and be wary of applications requesting excessive permissions.

Staying Safe in the Age of AI Apps

The incident underscores the importance of adopting proactive security measures. Regularly review app permissions on your devices, use strong and unique passwords, and be cautious about sharing personal information with unfamiliar applications. Consider using a reputable security suite to scan for malware and protect your privacy.

Staying informed about the latest security threats and best practices is also essential. Follow trusted security blogs, news sources, and advisories from organizations like the Federal Trade Commission (FTC) and the Cybersecurity and Infrastructure Security Agency (CISA).

Frequently Asked Questions

- What are AI chatbot apps? AI chatbot apps are software applications that use artificial intelligence to simulate conversation with users.

- Why are fake AI chatbot apps a concern? They can collect and misuse personal data, leading to privacy breaches and potential identity theft.

- How can I identify a fake AI chatbot app? Look for inconsistencies in branding, vague privacy policies, and excessive permission requests.

- What steps can I take to protect my data? Review app permissions, use strong passwords, and stay informed about security threats.

- Does Apple verify the data collection practices of apps? Apple relies on self-reporting by developers and currently lacks a comprehensive verification system.

What are the specific security risks content creators face when using fraudulent ChatGPT clone apps?

Beware of Dubious ChatGPT Clones Reappearing in the App Store: A Security Alert for Content Creators

The Resurgence of Fake AI Apps

Over the past few months, we’ve seen a worrying trend: a significant increase in fraudulent applications mimicking OpenAI’s ChatGPT appearing in app stores. These ChatGPT clones aren’t just harmless imitations; they pose a serious security risk to content creators and all users. As of late 2025, this issue is escalating, requiring immediate attention and preventative measures. The recent shutdown of virtual card platforms like Yeka (as highlighted in resources like https://github.com/chatgpt-helper-tech/chatgpt-plus-guide) has inadvertently created a vacuum, potentially driving users towards riskier, unofficial app sources.

Why Content Creators Are Prime Targets

Content creators – writers, marketers, designers, and anyone relying on AI writing tools – are especially vulnerable. Here’s why:

* Data Sensitivity: You likely input sensitive project details, client information, and potentially proprietary ideas into these tools. Fake apps are designed to steal this data.

* Financial Information: Many clones attempt to lure users with “free trials” that require credit card details, leading to unauthorized charges.

* Brand Reputation: If a compromised account is used to generate and distribute content, it can damage your professional reputation.

* Workflow Disruption: Dealing with malware or data breaches can seriously disrupt your creative process and productivity.

Identifying Fake ChatGPT Apps: Red Flags to Watch For

Distinguishing legitimate apps from malicious copies requires vigilance. Here’s what to look for:

* App Name & Developer: Scrutinize the app name for slight misspellings or variations of “ChatGPT.” Verify the developer’s identity. OpenAI’s official apps are published under “OpenAI.”

* Download Numbers & Ratings: Be wary of apps with very few downloads or overwhelmingly negative reviews. While new apps can be legitimate, a lack of established trust is a warning sign.

* Permissions Requested: Pay close attention to the permissions an app requests. Does a chatbot really need access to your contacts, camera, or microphone?

* In-App Purchases & Subscriptions: Aggressive or unusual in-app purchase prompts are a common tactic used by fraudulent apps.

* Poor Grammar & Spelling: Legitimate apps undergo rigorous quality control. Poorly written descriptions or in-app text are a strong indicator of a scam.

* Requests for Login Credentials: Never enter your OpenAI account credentials into a third-party app. Official integrations use API keys, not usernames and passwords.
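
Two of the checks above, a look-alike name from an unexpected developer and permissions a chatbot should not need, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names, the 0.7 similarity threshold, and the permission labels are hypothetical, and real app store listings expose this information in their own formats.

```python
from difflib import SequenceMatcher

# Assumption: the official name and publisher, per the red flags listed above.
OFFICIAL_NAME = "chatgpt"
OFFICIAL_DEVELOPER = "openai"

# Hypothetical permission labels; real platforms use their own identifiers.
UNEXPECTED_PERMISSIONS = {"contacts", "camera", "microphone"}


def name_similarity(app_name: str) -> float:
    """Return a 0..1 similarity score between app_name and the official name."""
    # Drop spaces and punctuation so "Chat GPT" and "Chat-GPT" compare as "chatgpt".
    cleaned = "".join(ch for ch in app_name.lower() if ch.isalnum())
    return SequenceMatcher(None, cleaned, OFFICIAL_NAME).ratio()


def red_flags(app_name, developer, permissions, threshold=0.7):
    """Collect human-readable warnings for a single app listing."""
    flags = []
    looks_official = name_similarity(app_name) >= threshold
    is_official_developer = developer.strip().lower() == OFFICIAL_DEVELOPER
    if looks_official and not is_official_developer:
        flags.append("name resembles ChatGPT but developer is not OpenAI")
    extra = sorted(UNEXPECTED_PERMISSIONS & {p.lower() for p in permissions})
    if extra:
        flags.append("requests unexpected permissions: " + ", ".join(extra))
    return flags


if __name__ == "__main__":
    for flag in red_flags("ChatGTP", "Some Dev", ["camera"]):
        print("WARNING:", flag)
```

With these assumptions, a listing named "ChatGTP" from an unknown developer that asks for camera access raises both warnings, while "ChatGPT" published by "OpenAI" with no extra permissions raises none. A score like this is a prompt for closer inspection, not proof that an app is fraudulent.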

Real-World Examples of ChatGPT Clone Scams

While specific, constantly evolving examples are difficult to track, patterns emerge. In early 2025, several apps claiming to offer “ChatGPT Pro” features were discovered to be malware, installing spyware on users’ devices. Another common scam involves apps offering “free ChatGPT access” in exchange for completing surveys, which then harvest personal data. These incidents highlight the importance of staying informed and cautious.

Protecting Your Data: Practical Steps for Content Creators

Here’s how to safeguard your work and personal information:

- Download from Official Sources: Only download apps from the official Apple App Store or Google Play Store. Even then, exercise caution.

- Enable Two-Factor Authentication (2FA): Protect your OpenAI account with 2FA for an extra layer of security.

- Use Strong, Unique Passwords: Avoid reusing passwords across multiple platforms.

- Regularly Review App Permissions: Periodically check the permissions granted to apps on your devices.

- Install a Reputable Mobile Security App: A good antivirus app can detect and remove malware.

- Be Skeptical of “Free” Offers: If something sounds too good to be true, it probably is.

- Report Suspicious Apps: If you encounter a suspicious app, report it to the app store promptly.

- Consider API Access: For developers and advanced users, accessing ChatGPT through the official API offers greater control and security.
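
For the advice on strong, unique passwords, a password manager is the usual answer, but the idea can be illustrated with Python's standard `secrets` module. This is a minimal sketch: the helper names, the 20-character default, and the 12-character minimum are assumptions chosen for illustration, not an official recommendation.

```python
import secrets
import string

# Letters, digits, and punctuation; some sites restrict which symbols they accept.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 20) -> str:
    """Build a random password using a cryptographically secure generator."""
    if length < 12:
        # Assumed minimum for this sketch; adjust to your own policy.
        raise ValueError("use at least 12 characters")
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


def passwords_for(services):
    """Generate a separate password per service so none is reused."""
    return {service: generate_password() for service in services}


if __name__ == "__main__":
    for service, password in passwords_for(["openai", "email"]).items():
        print(service, password)
```

Each service gets its own independently generated password, so a leak from one fraudulent app does not expose accounts elsewhere.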

The Role of App Store Security & User Reporting

Both Apple and Google are actively working to combat fraudulent apps. However, they rely heavily