The Silent Signals of Migraine: How Frailty & Sleep Quality Predict Headache Risk – A Deep Dive into UK Biobank Data


Migraines aren’t just bad headaches. They are debilitating neurological events that affect millions of people and often seem to strike out of nowhere. But what if your risk of migraine wasn’t random, and was instead signaled by your body’s overall health, specifically your level of frailty and the quality of your sleep? New research analyzing data from more than 356,000 participants in the UK Biobank suggests that both of these often-overlooked factors are linked to the likelihood of developing migraine. This isn’t about finding a cure for migraine, but about identifying those at higher risk and potentially intervening before the pain begins.


The Growing Burden of Migraine & The Search for Predictors

Migraine affects roughly 1 in 5 women and 1 in 15 men, and it carries a substantial personal and economic burden. Genetics and triggers such as stress and certain foods play a role, but a significant portion of migraine cases remains unexplained. Recent research is shifting toward identifying underlying health vulnerabilities that might predispose people to this complex condition. This new study, drawing on the large UK Biobank dataset, offers evidence that frailty and sleep quality are associated with migraine risk and may be modifiable.

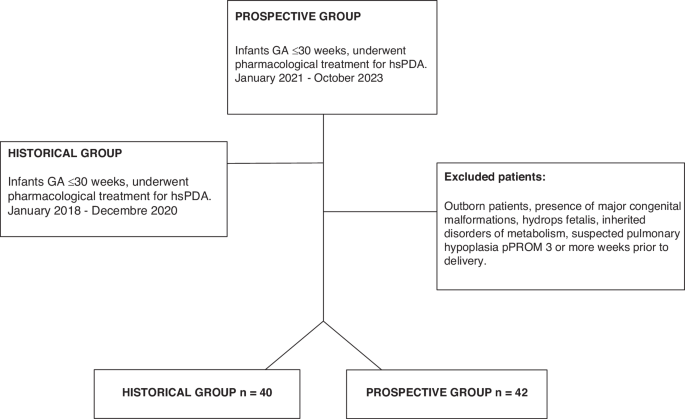

Understanding the UK Biobank Study: A Powerful Resource

The UK Biobank is a major resource for medical research, containing in-depth data from over 500,000 adults across the UK. Researchers used detailed health information collected between 2006 and 2010, including self-reported medical conditions, physical examinations, and biological samples. The study excluded participants with a pre-existing migraine diagnosis, those lost to follow-up, and those with incomplete data, so that the analysis captured only newly occurring migraine cases over a follow-up period extending to 2022. After these exclusions, 356,326 participants were included in the final analysis.

Frailty: More Than Just Aging

Frailty isn’t simply about getting older. It’s a state of increased vulnerability to stressors, characterized by a decline in physiological reserves. Researchers measured it with the well-established “frailty phenotype,” which assesses five components:

- Weight Loss: Unintentional loss of body weight.

- Exhaustion: Feeling constantly tired and lacking energy.

- Low Physical Activity: Reduced levels of exercise and movement.

- Slow Gait Speed: Walking at a slower pace than expected for age.

- Low Grip Strength: Weakness in hand grip.

Each component that was present counted as one point, giving a frailty score from 0 to 5, with higher scores indicating greater frailty. Participants were then categorized as non-frail, pre-frail, or frail. The study found a clear dose-response relationship: higher frailty scores were associated with a significantly increased risk of developing migraine. Specifically, the hazard ratio (HR), which compares how often migraine occurred in one group relative to a reference group over the follow-up period, rose with each one-point increase in the frailty score, even after adjusting for a wide range of other factors.
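The scoring described above can be sketched in a few lines of Python. This is a minimal illustration, not the study's code: the class and function names are hypothetical, and the category cut-offs (0 = non-frail, 1 to 2 = pre-frail, 3 or more = frail) are the conventional frailty phenotype thresholds, which this article does not spell out.

```python
from dataclasses import dataclass


@dataclass
class FrailtyComponents:
    """Presence (True) or absence (False) of each frailty phenotype component."""
    weight_loss: bool
    exhaustion: bool
    low_physical_activity: bool
    slow_gait_speed: bool
    low_grip_strength: bool


def frailty_score(c: FrailtyComponents) -> int:
    """Count the components that are present, giving a score from 0 to 5."""
    return sum([c.weight_loss, c.exhaustion, c.low_physical_activity,
                c.slow_gait_speed, c.low_grip_strength])


def frailty_category(score: int) -> str:
    """Map a score to a category.

    Assumed cut-offs (conventional, not stated in the article):
    0 -> non-frail, 1-2 -> pre-frail, 3-5 -> frail.
    """
    if not 0 <= score <= 5:
        raise ValueError("frailty score must be between 0 and 5")
    if score == 0:
        return "non-frail"
    if score <= 2:
        return "pre-frail"
    return "frail"


# Hypothetical participant reporting exhaustion and low physical activity.
person = FrailtyComponents(False, True, True, False, False)
print(frailty_score(person), frailty_category(frailty_score(person)))
```

Under these assumed thresholds, the hypothetical participant scores 2 and falls in the pre-frail group.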


The Crucial Role of Sleep: Beyond Just “Getting Enough”

Sleep isn’t just about quantity; it’s about quality. Researchers assessed five key aspects of sleep:

- Chronotype: Whether someone is a “morning person” or “night owl.”

- Duration: How many hours of sleep per night.

- Insomnia: Frequency of difficulty falling or staying asleep.

- Snoring: Whether the participant regularly snores.

- Sleepiness: How often the participant feels excessively sleepy during the day.

Each factor was categorized as healthy or unhealthy, and each healthy factor counted as one point, giving a sleep score from 0 to 5, with higher scores indicating better sleep. As with frailty, the study found a strong association: participants with poorer sleep quality had a significantly higher risk of migraine.
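A sleep score of this kind can be sketched as follows. The article does not state the cut-offs used for each factor, so the definitions of "healthy" here are assumptions (morning chronotype, 7 to 8 hours of sleep, insomnia never or rarely, no snoring, no frequent daytime sleepiness), and the function name and category labels are hypothetical.

```python
def sleep_score(chronotype: str, hours: float, insomnia: str,
                snores: bool, daytime_sleepiness: str) -> int:
    """Return the number of healthy sleep factors (0 to 5).

    All thresholds below are illustrative assumptions, not the study's
    published definitions.
    """
    healthy = [
        chronotype == "morning",                  # assumed: morning type is healthy
        7 <= hours <= 8,                          # assumed: 7-8 hours per night
        insomnia in {"never", "rarely"},          # assumed: no frequent insomnia
        not snores,                               # assumed: no regular snoring
        daytime_sleepiness in {"never", "rarely", "sometimes"},  # assumed
    ]
    return sum(healthy)


# Hypothetical participants.
print(sleep_score("morning", 7.5, "never", False, "never"))
print(sleep_score("evening", 6, "usually", True, "sometimes"))
```

The first hypothetical participant meets all five assumed criteria, while the second meets only one (no frequent daytime sleepiness).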


The Interplay of Frailty & Sleep: A Synergistic Effect?

Perhaps the most compelling finding was the interaction between frailty and sleep quality. Participants who were both frail and poor sleepers had the greatest risk of developing migraine, which suggests that the two factors reinforce each other rather than acting independently. The researchers tested this with two standard measures of additive interaction: the relative excess risk due to interaction (RERI) and the attributable proportion due to interaction (AP). Both indicate whether the combined risk exceeds what would be expected from adding the separate risks together. This highlights the importance of addressing both factors for effective migraine prevention.
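For readers curious how RERI and AP are calculated, here is a minimal sketch using their standard formulas, with hazard ratios standing in for relative risks. The function name is hypothetical and the input values are invented purely for illustration; they are not results from the study. A full analysis would also report confidence intervals, which this sketch omits.

```python
def additive_interaction(hr_both: float, hr_frailty_only: float,
                         hr_poor_sleep_only: float) -> tuple[float, float]:
    """Compute RERI and AP from three hazard ratios.

    Each hazard ratio is relative to the same reference group
    (non-frail with healthy sleep, whose hazard ratio is 1).

    RERI = HR_both - HR_frailty_only - HR_poor_sleep_only + 1
    AP   = RERI / HR_both
    """
    if hr_both <= 0:
        raise ValueError("hazard ratios must be positive")
    reri = hr_both - hr_frailty_only - hr_poor_sleep_only + 1.0
    ap = reri / hr_both
    return reri, ap


# Invented example values, NOT taken from the study.
reri, ap = additive_interaction(hr_both=2.0, hr_frailty_only=1.4,
                                hr_poor_sleep_only=1.3)
print(f"RERI = {reri:.2f}, AP = {ap:.2f}")
```

A RERI above 0 means the joint risk is larger than the sum of the individual excess risks, and AP expresses that excess as a share of the risk in the doubly exposed group. With the invented values above, RERI is 0.30 and AP is 0.15.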

What Does This Mean for You? Preventative Strategies & Future Research

This research doesn’t offer a quick fix for migraine, but it provides valuable insights for preventative strategies.

- Prioritize Sleep Hygiene: Focus on establishing a regular sleep schedule, creating a relaxing bedtime routine, and optimizing your sleep environment.

- Embrace a Physically Active Lifestyle: Regular exercise can help improve physical function and reduce frailty.

- Maintain a Healthy Diet: Nutrient-rich foods support overall health and can contribute to better sleep and reduced inflammation.

- Manage Stress: Chronic stress can exacerbate both frailty and sleep problems.

- Regular Check-ups: Discuss your risk factors with your doctor and consider regular health assessments to monitor your frailty status.

The researchers also conducted several secondary analyses to test the robustness of their findings, including:

- Adjusting for inflammatory bowel diseases and mental disorders.

- Accounting for environmental factors like air pollution and noise.

- Reducing reverse-causation bias by excluding migraine cases that occurred early in follow-up.

- Addressing potential bias from missing data using multiple imputation.

Looking Ahead: Further research is needed to explore the underlying biological mechanisms linking frailty, sleep quality, and migraine. Understanding these mechanisms could lead to the development of targeted interventions to prevent and manage this debilitating condition.
