NVIDIA and Stability AI Revolutionize Image Generation with RTX-Accelerated Stable Diffusion

Table of Contents

- 1. NVIDIA and Stability AI Revolutionize Image Generation with RTX-Accelerated Stable Diffusion

- 2. Unleashing the Power of TensorRT and FP8 for Stable Diffusion

- 3. Quantization: The Key to Efficient AI

- 4. Performance Benchmarks: 2x Speed and Reduced Memory

- 5. TensorRT for RTX: Empowering Developers

- 6. NVIDIA NIM Microservices: Streamlining AI Accessibility

- 7. The Impact on Content Creators and Beyond

- 8. Comparison of Stable Diffusion 3.5 Performance

- 9. The Enduring Value of AI Optimization

- 10. Frequently Asked Questions

- 11. What are the potential drawbacks of using mixed precision (FP16/FP32/INT8) for Stable Diffusion 3.5 model optimization with TensorRT, and how can they be mitigated?

- 12. TensorRT Accelerates Stable Diffusion 3.5 on RTX GPUs: Unleashing Performance

- 13. Understanding TensorRT and Its Role in AI Acceleration

- 14. Key Benefits of Using TensorRT

- 15. Stable Diffusion 3.5 and RTX GPUs: A Powerful Combination

- 16. Why TensorRT is Essential for Stable Diffusion 3.5

- 17. Practical Implementation: Optimizing Stable Diffusion 3.5 with TensorRT

- 18. Step-by-Step Guide to TensorRT Optimization

- 19. Performance Benchmarks: Real-World Results

- 20. Tips for Maximizing Performance

- 21. Conclusion

Breaking News: In a landmark collaboration, NVIDIA and Stability AI have achieved a major breakthrough in AI-driven image generation. By leveraging NVIDIA’s TensorRT and FP8 precision, the performance of the Stable Diffusion 3.5 models has doubled, while the video memory (VRAM) footprint on supported RTX GPUs has dropped significantly. This advancement democratizes access to high-fidelity AI imagery, allowing more users to harness the power of generative AI on their existing hardware.

Unleashing the Power of TensorRT and FP8 for Stable Diffusion

Generative AI is transforming content creation, but the increasing complexity of AI models demands more VRAM. The original Stable Diffusion 3.5 Large model required over 18GB of VRAM, limiting its accessibility. NVIDIA’s solution involved quantizing the model with TensorRT to FP8, which dramatically lowers VRAM consumption by 40% to just 11GB. This optimization allows a broader range of NVIDIA GeForce RTX GPUs, including the latest RTX 50 series, to efficiently run the model.
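The VRAM figures above can be checked with a little arithmetic. The following is a minimal sketch: the 18GB and 11GB values are the ones quoted in this article, while the card capacities are illustrative values assumed for the example, and the `fits` check is deliberately naive (it ignores activations and other runtime overhead).

```python
def percent_reduction(before_gb: float, after_gb: float) -> float:
    """Return the relative saving, in percent, going from before_gb to after_gb."""
    return (before_gb - after_gb) / before_gb * 100.0


def fits(model_gb: float, vram_gb: float) -> bool:
    """Naive check: is the model footprint no larger than the card's VRAM?"""
    return model_gb <= vram_gb


# Figures quoted in the article for SD3.5 Large.
BF16_FOOTPRINT_GB = 18.0
FP8_FOOTPRINT_GB = 11.0

# Illustrative VRAM capacities (assumed for this example, not from the article).
EXAMPLE_CARDS_GB = [12, 16, 24]

saving = percent_reduction(BF16_FOOTPRINT_GB, FP8_FOOTPRINT_GB)
print(f"VRAM saving: {saving:.1f}%")  # roughly the 40% quoted above

for vram in EXAMPLE_CARDS_GB:
    print(
        f"{vram}GB card: BF16 fits={fits(BF16_FOOTPRINT_GB, vram)}, "
        f"FP8 fits={fits(FP8_FOOTPRINT_GB, vram)}"
    )
```

Under these assumptions, the quantized model fits on cards where the original does not, which is the accessibility gain described above.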

Did You Know? FP8 quantization reduces the precision of non-critical layers in the AI model, minimizing memory usage while preserving image quality.

Quantization: The Key to Efficient AI

Quantization is the process of reducing the precision of the numbers used to represent a model’s parameters. Moving from higher-precision formats like FP16 or BF16 to FP8 significantly reduces the model’s memory footprint. NVIDIA’s GeForce RTX 40 Series GPUs, built on the Ada Lovelace architecture, support FP8, and the newer Blackwell GPUs add support for FP4 as well. This allows more complex AI models to be deployed on a wider range of hardware.
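To make the idea of "reducing precision" concrete, here is a small sketch in plain Python. It rounds a value to a given number of stored mantissa bits (7 for BF16, 3 for the E4M3 variant of FP8) and estimates weight storage from bytes per parameter. The helper names and the one-billion-parameter count are hypothetical, and the rounding ignores exponent range, subnormals, and saturation, so it models only the loss of precision, not a real FP8 implementation.

```python
import math

# Storage cost per parameter for each format, in bytes.
BYTES_PER_PARAM = {"fp16": 2, "bf16": 2, "fp8": 1}


def round_to_mantissa_bits(x: float, explicit_bits: int) -> float:
    """Round x to the nearest value representable with `explicit_bits` mantissa bits."""
    if x == 0.0 or not math.isfinite(x):
        return x
    mantissa, exponent = math.frexp(x)   # x = mantissa * 2**exponent, 0.5 <= |mantissa| < 1
    scale = 2 ** (explicit_bits + 1)     # +1 accounts for the implicit leading bit
    return math.ldexp(round(mantissa * scale) / scale, exponent)


def weight_gb(num_params: int, fmt: str) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes) for a given format."""
    return num_params * BYTES_PER_PARAM[fmt] / 1e9


weight = 0.1234
print("bf16-like:", round_to_mantissa_bits(weight, 7))
print("fp8-like: ", round_to_mantissa_bits(weight, 3))

# Hypothetical parameter count, chosen only to keep the arithmetic simple.
params = 1_000_000_000
print("bf16 weights:", weight_gb(params, "bf16"), "GB")
print("fp8 weights: ", weight_gb(params, "fp8"), "GB")
```

The FP8-like value lands further from the original weight than the BF16-like one, which is the trade-off quantization makes in exchange for halving the bytes stored per parameter.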

Performance Benchmarks: 2x Speed and Reduced Memory

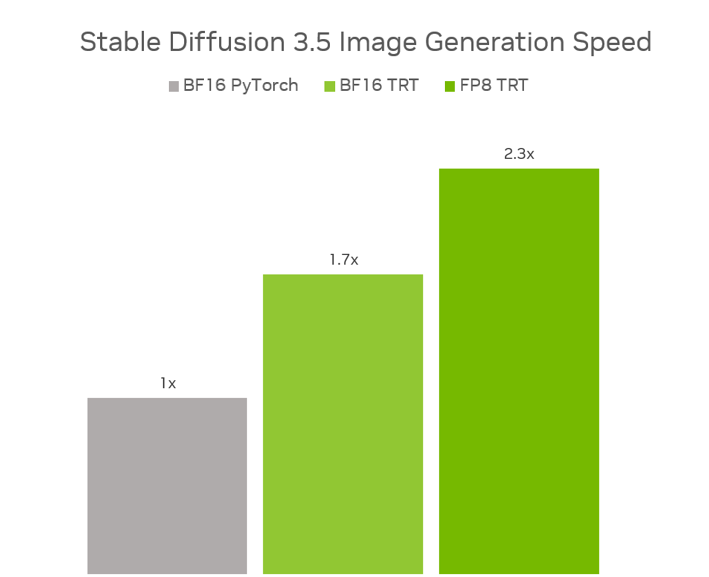

The collaboration between NVIDIA and Stability AI has yielded remarkable results. The FP8 TensorRT optimization delivers a 2.3x performance boost on Stable Diffusion 3.5 Large compared to running the original models in BF16 PyTorch. For the SD3.5 Medium model, BF16 TensorRT provides a 1.7x speedup. These speed improvements, coupled with the 40% reduction in memory usage, represent a notable leap forward in AI efficiency.

FP8 TensorRT boosts SD3.5 Large performance by 2.3x vs. BF16 PyTorch, with 40% less memory use. For SD3.5 Medium, BF16 TensorRT delivers a 1.7x speedup.

TensorRT for RTX: Empowering Developers

NVIDIA has also released TensorRT for RTX as a standalone SDK, empowering developers to create optimized AI engines on-device. This just-in-time (JIT) compilation approach streamlines AI deployment to millions of RTX AI PCs. The new SDK is 8x smaller and easily integrates through Windows ML, Microsoft’s AI inference backend.

Pro Tip: By using TensorRT for RTX, developers can create a generic AI engine that is optimized on-device in seconds, eliminating the need to pre-generate GPU-specific optimizations.

NVIDIA NIM Microservices: Streamlining AI Accessibility

In another strategic move, NVIDIA and Stability AI are collaborating to release SD3.5 as an NVIDIA NIM microservice. This initiative aims to simplify access and deployment of the model for a wide array of applications. The NIM microservice is slated for release in July, promising to further democratize AI accessibility.

The Impact on Content Creators and Beyond

These advancements have profound implications for content creators, game developers, and anyone working with AI-generated content. Faster generation speeds and reduced memory requirements translate to increased productivity and more seamless workflows. Moreover, the broader accessibility of Stable Diffusion 3.5 opens new avenues for innovation across various industries.

Comparison of Stable Diffusion 3.5 Performance

| Model | Optimization | Performance Boost | Memory Reduction |
|---|---|---|---|
| SD3.5 Large | FP8 TensorRT vs. BF16 PyTorch | 2.3x | 40% |
| SD3.5 Medium | BF16 TensorRT vs. BF16 PyTorch | 1.7x | – |

With these advancements, are you excited to leverage the optimized Stable Diffusion 3.5 in your projects? What new creative possibilities do you foresee with these faster generation speeds and reduced memory requirements?

The Enduring Value of AI Optimization

The collaboration between NVIDIA and Stability AI exemplifies the ongoing pursuit of efficiency and accessibility in the field of artificial intelligence. As AI models continue to evolve, optimization techniques like quantization and specialized runtimes such as TensorRT will play a crucial role in making these technologies available to a wider audience. The ability to run complex AI models on a broader range of hardware democratizes innovation and empowers individuals and organizations to leverage AI in new and exciting ways.

Frequently Asked Questions

- Q: What is Stable Diffusion and how does it benefit from RTX acceleration?

- A: Stable Diffusion is a popular AI image model. RTX acceleration, through NVIDIA TensorRT, boosts its performance and reduces VRAM needs, enabling faster and more efficient image generation.

- Q: How does FP8 quantization improve Stable Diffusion model performance?

- A: FP8 quantization reduces the memory footprint of the Stable Diffusion model by decreasing the precision of its parameters, leading to faster processing and reduced VRAM usage.

- Q: What are the advantages of using TensorRT for optimizing AI models like Stable Diffusion?

- A: TensorRT optimizes the model’s weights and graph specifically for RTX GPUs, resulting in significant performance improvements.

- Q: How does the NVIDIA NIM microservice simplify the deployment of Stable Diffusion?

- A: The NVIDIA NIM microservice streamlines access and deployment of Stable Diffusion, making it easier for developers to integrate the model into various applications.

- Q: Which NVIDIA GPUs benefit most from these Stable Diffusion optimizations?

- A: NVIDIA GeForce RTX 40 Series (Ada Lovelace) GPUs and newer, including Blackwell GPUs, benefit most from these Stable Diffusion optimizations.

- Q: Where can developers access the optimized Stable Diffusion models and TensorRT SDK?

- A: The optimized Stable Diffusion models are available on Stability AI’s Hugging Face page, and the TensorRT SDK can be downloaded from the NVIDIA Developer page.


What are the potential drawbacks of using mixed precision (FP16/FP32/INT8) for Stable Diffusion 3.5 model optimization with TensorRT, and how can they be mitigated?

TensorRT Accelerates Stable Diffusion 3.5 on RTX GPUs: Unleashing Performance

Are you ready to supercharge your AI image generation? This article dives deep into how NVIDIA TensorRT is revolutionizing the way Stable Diffusion 3.5 runs on RTX GPUs. We will explore optimization techniques and practical tips for achieving higher speeds.

Understanding TensorRT and Its Role in AI Acceleration

TensorRT is a high-performance deep learning inference optimizer and runtime, designed specifically to accelerate inference workloads on NVIDIA GPUs. By optimizing model graphs, reducing precision, and applying techniques like kernel fusion, TensorRT significantly boosts the performance of AI models. Think of it as a turbocharger for your Stable Diffusion engine, making image generation far faster and more efficient.

Key Benefits of Using TensorRT

- Increased Inference Speed: TensorRT dramatically cuts down on the time it takes to generate images. This directly translates to faster iteration and a more responsive user experience.

- Improved Performance: Witness higher throughput and more efficient GPU utilization.

- Optimized Memory Usage: TensorRT often leads to a reduced memory footprint, allowing you to run larger models and generate higher-resolution images.

- Reduced Latency: Experience lower latency, making interactive AI experiences smoother.

Stable Diffusion 3.5 and RTX GPUs: A Powerful Combination

Stable Diffusion 3.5, a cutting-edge text-to-image AI model, coupled with the power of RTX GPUs, creates a formidable platform. However, even with powerful hardware, optimization is crucial. TensorRT steps in to harness the full capabilities of your RTX GPU, minimizing performance bottlenecks.

Why TensorRT is Essential for Stable Diffusion 3.5

- Efficient Model Execution: Stable Diffusion models are complex. TensorRT streamlines the execution process.

- Real-Time Image Generation: For applications needing rapid image generation, TensorRT is a game-changer.

- Cost Optimization: Faster inference means you get more done with your existing hardware, efficiently managing costs.

Practical Implementation: Optimizing Stable Diffusion 3.5 with TensorRT

Integrating TensorRT into your Stable Diffusion workflow isn’t as daunting as it sounds. Several tools and techniques are available to streamline the process, which can be broken down into stages: model conversion (if needed), optimization, and inference.

Step-by-Step Guide to TensorRT Optimization

This is a simplified outline; specific implementations vary based on the Stable Diffusion setup.

- Model Preparation: Ensure you have your Stable Diffusion 3.5 model ready. Some models may require specific formats compatible with TensorRT, and adjusting specific parameters is often required.

- Conversion (If Needed): If your model isn’t in a TensorRT-friendly format, you might need to convert it. This usually involves tools provided by NVIDIA or the machine-learning framework (e.g., PyTorch). Consult the NVIDIA documentation for best practices.

- Optimization: Use TensorRT’s optimization tools to fine-tune your model for your specific RTX GPU. This involves techniques like layer fusion and precision calibration.

- Inference: Run your optimized model using the TensorRT runtime to generate images. The speed improvements should be noticeable.
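The conversion and optimization steps above can be sketched roughly as follows. This is a minimal illustration, not a complete SD3.5 pipeline: it assumes the TensorRT 10.x Python package is installed, that one model component has already been exported to an ONNX file with static input shapes, and that FP16 is an acceptable precision (FP8 generally requires a model that was quantized beforehand). The function names and the file path are hypothetical.

```python
from pathlib import Path


def engine_path_for(onnx_path: str) -> Path:
    """Place the serialized engine next to the ONNX file, with a .plan suffix."""
    return Path(onnx_path).with_suffix(".plan")


def build_engine(onnx_path: str, use_fp16: bool = True) -> Path:
    """Parse an ONNX file and write a serialized TensorRT engine to disk."""
    import tensorrt as trt  # imported lazily so this module loads without TensorRT

    logger = trt.Logger(trt.Logger.WARNING)
    builder = trt.Builder(logger)
    network = builder.create_network(0)  # assumes TensorRT 10.x (explicit batch by default)
    parser = trt.OnnxParser(network, logger)

    # Conversion step: load the exported ONNX graph into a TensorRT network.
    if not parser.parse_from_file(onnx_path):
        errors = [str(parser.get_error(i)) for i in range(parser.num_errors)]
        raise RuntimeError("ONNX parse failed: " + "; ".join(errors))

    # Optimization step: the builder selects and fuses kernels for the local GPU.
    config = builder.create_builder_config()
    if use_fp16:
        config.set_flag(trt.BuilderFlag.FP16)

    serialized = builder.build_serialized_network(network, config)
    if serialized is None:
        raise RuntimeError("TensorRT engine build failed")

    out_path = engine_path_for(onnx_path)
    with open(out_path, "wb") as f:
        f.write(serialized)
    return out_path


# Hypothetical usage on a machine with an RTX GPU and TensorRT installed:
# build_engine("models/transformer.onnx")
```

The inference step is left out here; it would load the saved engine with the TensorRT runtime and bind input and output buffers, which depends heavily on the surrounding pipeline.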

Performance Benchmarks: Real-World Results

The gains from using TensorRT can be significant, but actual numbers depend on your specific hardware, model size, and image generation parameters. Speedups of roughly 2x to 5x are possible. The figures below are estimates, not measurements, so rigorous testing on your own hardware is crucial.

| Metric | Without TensorRT | With TensorRT | Gain |
|---|---|---|---|
| Image Generation Time (per image) | 15 seconds | 4 seconds | 3.75x |
| FPS (Images per Second) | 0.067 | 0.25 | 3.75x |
| Memory Usage | 12GB | 8GB | ~33% reduction |
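The rows of this table are related by simple arithmetic, which the short sketch below reproduces. The inputs are the estimated values from the table, not measurements, and the helper names are introduced only for illustration. The same functions can be reused with timings from your own runs.

```python
def speedup(baseline_s: float, optimized_s: float) -> float:
    """How many times faster the optimized run is than the baseline."""
    return baseline_s / optimized_s


def images_per_second(seconds_per_image: float) -> float:
    """Throughput is the reciprocal of per-image generation time."""
    return 1.0 / seconds_per_image


def memory_saving_percent(before_gb: float, after_gb: float) -> float:
    """Relative memory saving in percent."""
    return (before_gb - after_gb) / before_gb * 100.0


# Estimated values taken from the table above.
print("gain:", speedup(15.0, 4.0))
print("throughput before:", round(images_per_second(15.0), 3))
print("throughput after: ", images_per_second(4.0))
print("memory saving:", round(memory_saving_percent(12.0, 8.0), 1), "%")
```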

Tips for Maximizing Performance

- Update Drivers: Always use the latest NVIDIA drivers for optimal performance.

- Model Precision: Experiment with mixed precision (FP16/FP32/INT8) based on your GPU’s capabilities, but note that specific precision is highly dependent on the hardware and model itself.

- Batch Size: Test different batch sizes to determine the optimal setting for your setup.

- Profiling Tools: Use NVIDIA’s profiling tools to identify and address performance bottlenecks.
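The batch-size tip can be turned into a small timing harness. The sketch below is one possible approach using only the standard library: `sweep_batch_sizes` is a hypothetical helper, and `fake_generate` is a stand-in that sleeps instead of calling a real pipeline. With a real GPU pipeline, the callable would need to wait for GPU work to finish before returning, otherwise the timings would be too optimistic.

```python
import time
from typing import Callable, Dict, Iterable


def sweep_batch_sizes(
    generate: Callable[[int], None],
    batch_sizes: Iterable[int],
    repeats: int = 3,
) -> Dict[int, float]:
    """Return images per second for each batch size, using the best of `repeats` runs."""
    results: Dict[int, float] = {}
    for batch in batch_sizes:
        best = float("inf")
        for _ in range(repeats):
            start = time.perf_counter()
            generate(batch)
            best = min(best, time.perf_counter() - start)
        results[batch] = batch / best
    return results


def fake_generate(batch: int) -> None:
    """Stand-in for a real pipeline call: a fixed cost plus a per-image cost."""
    time.sleep(0.01 + 0.002 * batch)


throughput = sweep_batch_sizes(fake_generate, [1, 2, 4])
best_batch = max(throughput, key=throughput.get)
print("images/sec by batch size:", throughput)
print("best batch size:", best_batch)
```

Taking the best of several runs reduces the influence of one-off stalls. Larger batches also use more VRAM, so the fastest setting is only useful if it still fits on your card.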

Conclusion

By integrating TensorRT into your Stable Diffusion 3.5 workflow on RTX GPUs, you unlock a new level of speed and efficiency. Embrace the potential of accelerated image generation, and leverage TensorRT to get faster results from your existing hardware.