
Microsoft’s AI Chip Ambitions Face Setbacks: Braga Chip Delayed until 2026

Microsoft’s foray into custom artificial intelligence hardware has hit a significant roadblock. Its next-generation Maia chip, code-named Braga, is now expected to enter mass production in 2026, at least six months behind its original schedule. The delay casts a shadow over Microsoft’s ambition to challenge Nvidia’s dominance of the artificial intelligence chip market and highlights the formidable technical and organizational challenges of developing competitive silicon.

Braga Chip Delay: A Blow to Microsoft’s AI Strategy

Microsoft launched its custom chip program to reduce its heavy reliance on Nvidia’s high-performance graphics processing units (GPUs), which power most of the world’s artificial intelligence data centers. Like its cloud rivals Amazon and Google, Microsoft has invested heavily in bespoke silicon designed for artificial intelligence workloads. The postponement of Braga’s production is a setback for this strategy.

The delay means Braga will likely trail Nvidia’s Blackwell chips in performance by the time it is ready for deployment, widening the performance gap between Microsoft’s silicon and Nvidia’s.

Development Challenges and Setbacks

Numerous challenges have plagued the Braga chip’s development phase. Unforeseen design modifications, insufficient staffing, and significant employee turnover have consistently pushed back the project’s timeline.

One major setback came when OpenAI, a key Microsoft partner, requested new features late in the development cycle. These late additions reportedly caused instability in simulations, leading to further delays. Meanwhile, intense deadline pressure has fueled attrition, with some teams losing as much as 20% of their staff.

Microsoft’s Vertical Integration Push

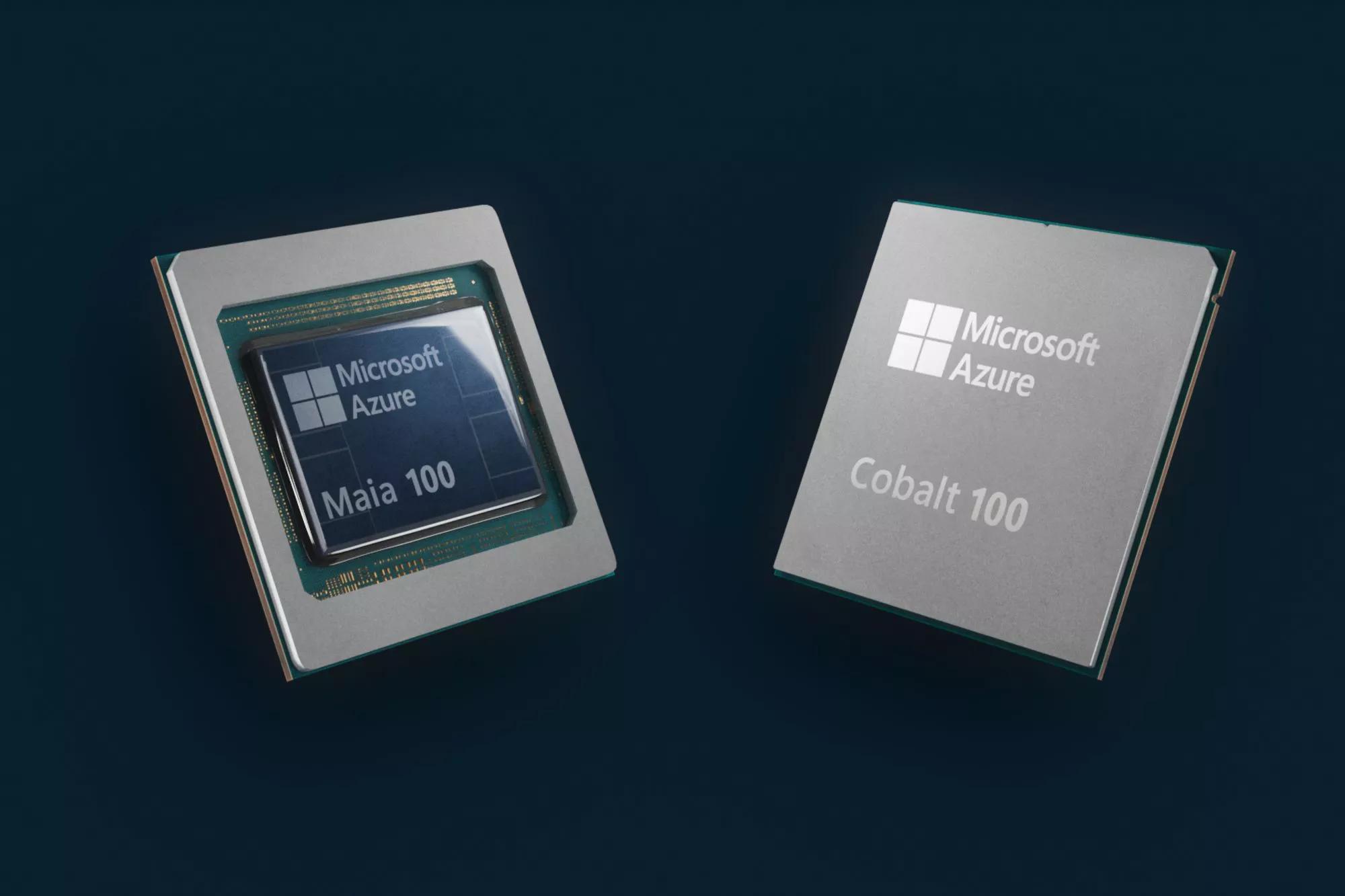

The Maia series, which includes the Braga chip, embodies Microsoft’s strategy to vertically integrate its artificial intelligence infrastructure. These chips are custom-designed to optimize Azure cloud workloads. Launched in late 2023, the Maia 100 leverages cutting-edge 5-nanometer technology and boasts tailored rack-level power management and liquid cooling solutions to effectively manage the significant thermal demands of artificial intelligence applications.

Did You Know? The “5-nanometer” label on the Maia 100’s manufacturing process is a process-node name rather than a literal measurement of its transistors. Each newer node generally packs more transistors into the same area, which tends to yield faster and more energy-efficient chips.

Focus on Inference

Microsoft has optimized these chips primarily for inference rather than the more resource-intensive training phase, in line with its plan to deploy them in data centers that power services such as Copilot and Azure OpenAI. However, the Maia 100 has seen limited deployment beyond internal testing because it was designed before the recent explosive growth of generative AI and large language models.

Pro Tip: Inference is the process of using a trained AI model to make predictions or decisions on new data. It’s less computationally intensive than training, making it ideal for real-time applications.
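The Pro Tip’s claim that inference is less computationally intensive than training can be illustrated with a deliberately tiny Python sketch. It assumes a one-feature linear model, hypothetical training data drawn from y = 2x + 1, and illustrative function names (`train`, `predict`) that have nothing to do with Microsoft’s or Nvidia’s software. Training repeatedly runs a forward pass, computes gradients, and updates the weights over the whole dataset; inference is a single forward pass with frozen weights.

```python
# Toy sketch (hypothetical model and data): why inference is cheaper than training.
# Model: y = w * x + b, fitted with full-batch gradient descent on mean squared error.


def predict(w: float, b: float, x: float) -> float:
    """Inference: a single forward pass with frozen weights."""
    return w * x + b


def train(
    data: list[tuple[float, float]], epochs: int = 500, lr: float = 0.05
) -> tuple[float, float, int]:
    """Training: repeat forward pass, gradient computation and weight update.

    Returns the learned weights and the number of forward passes performed.
    """
    w, b = 0.0, 0.0
    forward_passes = 0
    n = len(data)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            error = predict(w, b, x) - y  # forward pass
            forward_passes += 1
            grad_w += 2 * error * x / n  # gradient of MSE with respect to w
            grad_b += 2 * error / n  # gradient of MSE with respect to b
        w -= lr * grad_w  # weight update after each pass over the data
        b -= lr * grad_b
    return w, b, forward_passes


if __name__ == "__main__":
    # Hypothetical training data drawn from y = 2x + 1.
    data = [(float(x), 2.0 * x + 1.0) for x in range(5)]
    w, b, passes = train(data)
    print(f"training: {passes} forward passes, learned w={w:.2f}, b={b:.2f}")
    # Inference on a new input costs exactly one forward pass.
    print(f"inference: predict(10) = {predict(w, b, 10.0):.2f}")
```

With these settings, training performs 2,500 forward passes (500 epochs × 5 samples), each followed by a gradient computation, while predicting a new value costs exactly one. Production models have billions of parameters rather than two, but the same asymmetry is one reason inference-focused chips such as Maia can target a lighter workload than training hardware.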

“What’s the point of building an ASIC if it’s not going to be better than the one you can buy?” – Nvidia CEO Jensen Huang


Nvidia’s Competitive Edge

In contrast, Nvidia’s Blackwell chips, which began their rollout in late 2024, are engineered to handle both training and inference at a massive scale. These chips boast over 200 billion transistors and are built on a custom TSMC process, enabling exceptional speed and energy efficiency. This technological advantage has solidified Nvidia’s position as the premier supplier for artificial intelligence infrastructure globally.

Did You Know? Blackwell chips are named after David Blackwell, a renowned statistician and mathematician, continuing Nvidia’s practice of naming its GPU architectures after scientists.

The High Stakes of the AI Chip Race

The stakes in the artificial intelligence chip race are exceptionally high. Microsoft’s delay means Azure customers will remain dependent on Nvidia hardware for longer, which could raise costs and limit Microsoft’s ability to differentiate its cloud offerings. Meanwhile, Amazon and Google are making steady progress with their own silicon: Amazon’s Trainium 3 and Google’s seventh-generation Tensor Processing Units are gaining traction in data centers.

Nvidia appears unfazed by the growing competition. CEO Jensen Huang has acknowledged that major technology companies are investing in custom artificial intelligence chips, but he has questioned the rationale for such investments when Nvidia’s existing products already set the benchmark for performance and efficiency.

What impact will this delay have on Microsoft’s ability to compete in the AI cloud market? Will other companies be able to close the gap?

| Feature | Microsoft Maia (Braga) | Nvidia Blackwell |
|---|---|---|
| Target use | Azure cloud inference | Training and inference |
| Production timeline | 2026 (delayed) | Late 2024 (ongoing) |
| Transistor count | Not publicly disclosed | Over 200 billion |
| Key advantage | Customized for Azure | High performance, energy efficiency |

The Future of AI Chips

The race to develop superior AI chips is only intensifying. As AI models become more complex and demanding, demand for specialized hardware will keep rising, and companies that can successfully design and manufacture these chips will hold a significant advantage in a rapidly evolving AI landscape. The investments by Microsoft, Amazon, and Google highlight the strategic importance of controlling this critical component of the AI ecosystem.

Frequently Asked Questions

- Why is Microsoft developing its own AI chips?

- What are the main challenges in developing AI chips?

- How does the delay of the Braga chip affect Microsoft’s AI strategy?

- What is the significance of Nvidia’s dominance in the AI chip market?

What are the potential long-term consequences of Microsoft’s AI chip delays for its overall AI strategy and market share, given Nvidia’s strong position?

Microsoft AI Chip Delays: Nvidia’s Dominance in the AI Chip Market

The artificial intelligence (AI) chip market is evolving rapidly, with tech giants vying for supremacy. Recent developments, notably Microsoft’s AI chip delays, have reshaped the competitive landscape and given established players like Nvidia room to consolidate their market position further. This article examines the specifics of these delays, their implications for the industry, and Nvidia’s resulting gains.

The Impact of Microsoft AI Chip Delays

Microsoft, traditionally a software powerhouse, has been investing heavily in its own AI chip development. However, reports indicate that these efforts have faced setbacks, resulting in delays. Such delays can stem from various factors, including chip design complexity, manufacturing challenges, and supply chain bottlenecks. At the same time, the Windows 11 updates released in January 2025 (KB5050009 and KB5050021) show that Microsoft continues to integrate AI models into its operating systems, which requires robust and efficient hardware support (Source: Microsoft Support Forum). This underscores Microsoft’s need for powerful AI processing capabilities.

These delays have several repercussions:

- Increased Reliance on External Suppliers: Microsoft must continue relying on external suppliers, primarily Nvidia, for its AI hardware needs.

- Slower Innovation: Delays can hinder the pace of AI innovation within Microsoft’s ecosystem, preventing rapid deployment of new AI-powered features.

- Potential Market Share Loss: While Microsoft aims for greater independence in its AI infrastructure, these delays provide opportunities for competitors to forge ahead.

Nvidia’s Strategic Advantage: Capitalizing on the Opportunity

While Microsoft works through its AI chip design challenges, Nvidia remains the clear frontrunner in the AI chip market. Its graphics processing units (GPUs), and specifically its AI accelerators, are widely considered the industry standard for deep learning and other AI applications. Nvidia’s strong position is fueled by continuous innovation, cutting-edge R&D, and a robust software ecosystem.

Nvidia’s Market Share and Growth

Nvidia’s share of the AI chip market is substantial and continues to grow. This growth is fueled by:

- Robust Product Portfolio: Nvidia offers a comprehensive range of AI chips, catering to various needs, from data centers to edge devices.

- Strong Software Ecosystem: CUDA, Nvidia’s parallel computing platform and programming model, provides developers with powerful tools and libraries.

- Early Mover Advantage: Nvidia’s long-standing commitment to AI has solidified its leading position, with its AI hardware powering many of the world’s top AI projects.

Case Study: Expanding Nvidia’s Data Center Presence

Major cloud service providers and other data center operators are increasingly investing in Nvidia’s AI chips. One major cloud provider in 2024/2025 illustrates this: the company invested a significant amount of capital in upgrading its infrastructure to accelerate AI model training and inference. This strategic bet underscores the widespread recognition of Nvidia’s performance, particularly in complex and demanding tasks.

Estimated Market Share of AI Chip Vendors (2024-2025), Example

| Vendor | Estimated Market Share (%) |
|---|---|
| Nvidia | 75 |
| Intel | 10 |
| AMD | 5 |
| Other | 10 |

The Future of the AI Chip Market

Microsoft’s AI chip delays are not necessarily a permanent setback, but they are affecting current market dynamics. Expect more competition, innovation, and price changes as the industry continues to refine the technology. Custom AI chips, such as those from Microsoft and other companies, will likely play a larger role in the future, and as the AI revolution continues, demand for advanced AI hardware will only increase.

Key Trends to Watch

- Increased Competition: As the AI chip market expands, more players will emerge.

- Hardware/Software Integration: Synergy between software and specialized hardware will become increasingly important for unlocking the full potential of AI.

- Edge Computing Growth: Demand for efficient AI processing at the edge will push the boundaries of AI hardware.

Microsoft is developing its own AI chips to reduce reliance on Nvidia, customize hardware for Azure cloud workloads, and potentially lower costs. These custom chips allow for greater control over performance and efficiency.

Developing AI chips involves steep technical and organizational hurdles. Unexpected design changes, staffing shortages, high turnover, and the need to meet aggressive performance targets all contribute to the challenges.

The delay of the Braga chip means Microsoft will continue to rely on Nvidia hardware for longer, potentially increasing costs and limiting its ability to differentiate its cloud services. It also widens the performance gap between Microsoft’s offerings and Nvidia’s Blackwell chips.