Claude Haiku 4.5: New AI Model Promises Speed and Affordability

Table of Contents

- 1. Claude Haiku 4.5: New AI Model Promises Speed and Affordability

- 2. Coding and Logical Reasoning Capabilities

- 3. Performance Benchmarks: A Close Race

- 4. Enhanced Agent Management and Security Protocols

- 5. Availability and Accessibility

- 6. The Growing Importance of AI Model Efficiency

- 7. Frequently Asked Questions about Claude Haiku 4.5

- 8. How does Sonnet 4.5’s AI-powered code synthesis impact the skill sets required of developers?

- 9. Revolutionary Coding Innovation: Triple the Cost Efficiency and Double the Speed of Sonnet 4.5

- 10. Understanding the Sonnet 4.5 Paradigm Shift

- 11. The Core Technologies Driving Sonnet 4.5

- 12. Cost Efficiency: How Sonnet 4.5 Delivers 3x Savings

- 13. Speed Enhancement: Doubling Project Velocity

- 14. Real-World Applications & Case Studies

- 15. Practical Tips for Implementing Sonnet 4.5

Technology firm Anthropic has released Claude Haiku 4.5, a groundbreaking addition to its Claude 4 series. The new model is designed to be notably fast and economical, and Anthropic positions it as surpassing previous iterations in both performance and cost-effectiveness. The company prices the model at $1 per million input tokens and $5 per million output tokens, making it a compelling option for a wide range of developers.
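To make per-token pricing concrete, here is a minimal Python sketch that estimates the list-price cost of a single request, assuming rates of $1 per million input tokens and $5 per million output tokens. The function name and the token counts in the example are hypothetical, and the sketch ignores any discounts (for example, prompt caching or batch processing).

```python
# Assumed list prices for Claude Haiku 4.5, in USD per million tokens.
INPUT_PRICE_PER_MTOK = 1.00
OUTPUT_PRICE_PER_MTOK = 5.00


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated list-price cost in USD of one request (no discounts)."""
    return (input_tokens * INPUT_PRICE_PER_MTOK
            + output_tokens * OUTPUT_PRICE_PER_MTOK) / 1_000_000


# Hypothetical chatbot turn: 2,000 input tokens and 500 output tokens.
print(f"${request_cost(2_000, 500):.4f}")
```

Because output tokens cost five times as much as input tokens under these rates, long responses dominate the bill even when prompts are large.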

Coding and Logical Reasoning Capabilities

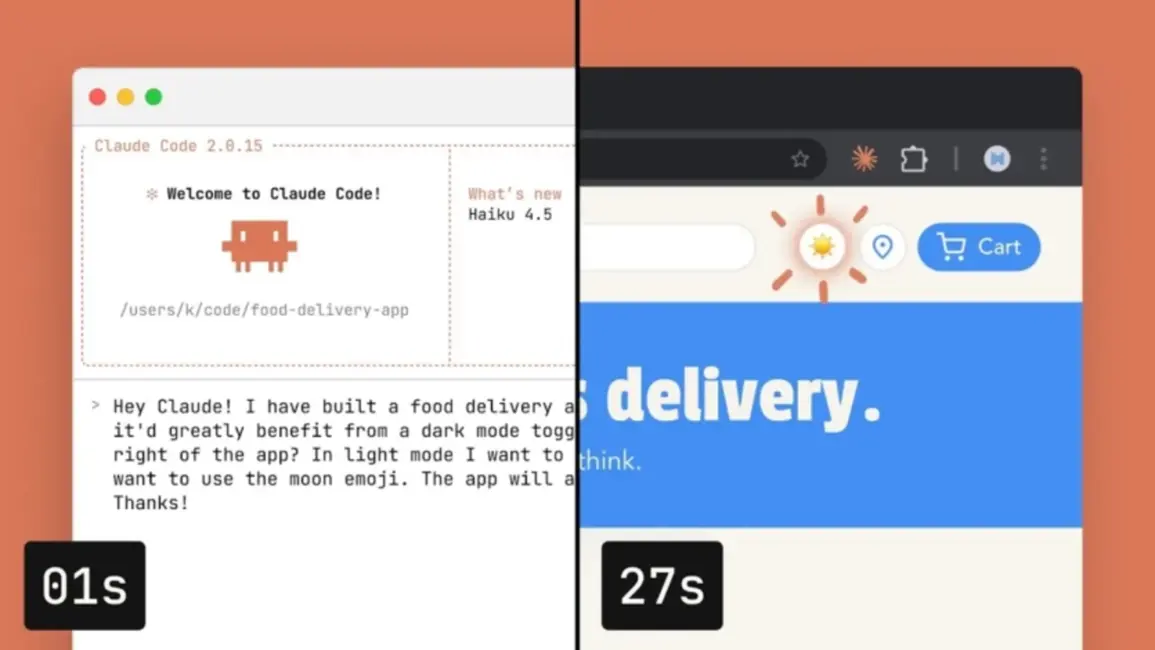

Remarkably, Claude Haiku 4.5 delivers performance close to that of the larger, more capable Sonnet 4.5, especially in computer programming and logical problem-solving. It runs substantially faster and costs one-third as much as its larger counterpart. Anthropic highlights the model’s suitability for powering AI applications such as chatbots and digital assistants, as well as for collaborative (pair) programming.

Performance Benchmarks: A Close Race

The model’s software development ability is backed by benchmark results. Claude Haiku 4.5 scored 73.3% on SWE-bench Verified, a widely recognized benchmark for evaluating software development capabilities. That trails Sonnet 4.5’s score of 77.2% by only 3.9 percentage points, a small gap given Haiku 4.5’s higher speed and lower price.

| Model | SWE-bench Verified Score | Relative Speed | Input Token Price |
|---|---|---|---|
| Claude Haiku 4.5 | 73.3% | Fastest | $1/million input tokens |
| Claude Sonnet 4.5 | 77.2% | Moderate | $3/million input tokens (estimated) |
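The trade-off in the comparison table can be quantified with simple arithmetic. This short Python snippet, an illustration rather than an official comparison, computes the score gap, Haiku 4.5's score as a fraction of Sonnet 4.5's, and the ratio of their input prices using the table's figures.

```python
# SWE-bench Verified scores and input prices (USD per million tokens) from the table.
models = {
    "Claude Haiku 4.5": {"swe_bench": 73.3, "input_usd_per_mtok": 1.0},
    "Claude Sonnet 4.5": {"swe_bench": 77.2, "input_usd_per_mtok": 3.0},
}
haiku = models["Claude Haiku 4.5"]
sonnet = models["Claude Sonnet 4.5"]

gap_points = sonnet["swe_bench"] - haiku["swe_bench"]        # absolute gap in points
relative_score = haiku["swe_bench"] / sonnet["swe_bench"]    # Haiku as a share of Sonnet
price_ratio = haiku["input_usd_per_mtok"] / sonnet["input_usd_per_mtok"]

print(f"Score gap: {gap_points:.1f} percentage points")
print(f"Haiku 4.5 reaches {relative_score:.1%} of Sonnet 4.5's score")
print(f"at {price_ratio:.0%} of its input price")
```

In other words, Haiku 4.5 keeps roughly 95% of Sonnet 4.5's benchmark score at about a third of the input price.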

Enhanced Agent Management and Security Protocols

Claude Haiku 4.5 is also well suited to multi-agent setups, in which several smaller AI models work together as sub-agents on complex tasks. For example, Sonnet 4.5 could oversee large-scale project planning and delegate specific calculations or subtasks to numerous instances of Haiku 4.5 running in parallel, speeding up overall processing.
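The orchestrator and sub-agent pattern can be sketched with Python's `asyncio`: a planner splits a task into subtasks, and several workers handle them concurrently. This is a minimal offline sketch under stated assumptions. `plan` and `haiku_worker` are hypothetical stand-ins for calls to Sonnet 4.5 and Haiku 4.5, not Anthropic APIs, and no real model is contacted.

```python
import asyncio


def plan(task: str) -> list[str]:
    """Hypothetical planner (the role Sonnet 4.5 would play): split a task into subtasks."""
    return [f"{task} / part {i}" for i in range(1, 4)]


async def haiku_worker(subtask: str) -> str:
    """Hypothetical sub-agent (the role a Haiku 4.5 instance would play).

    A real version would send `subtask` to a model API; here we simulate
    network latency and return a placeholder result so the sketch runs offline.
    """
    await asyncio.sleep(0.01)
    return f"done: {subtask}"


async def orchestrate(task: str) -> list[str]:
    subtasks = plan(task)
    # Fan out: all workers run concurrently; gather returns results in input order.
    return await asyncio.gather(*(haiku_worker(s) for s in subtasks))


results = asyncio.run(orchestrate("refactor billing module"))
for line in results:
    print(line)
```

Because the workers wait concurrently, total wall-clock time is close to that of the slowest subtask rather than the sum of all of them, which is the speed-up the delegation pattern relies on.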

Anthropic also reports safety improvements. In its automated alignment assessments, Haiku 4.5 showed fewer instances of misaligned behavior than earlier models, including other members of the Claude 4 family. Larger models such as Sonnet 4.5 and Opus 4.1 are released under the stricter AI Safety Level 3 (ASL-3) standard, whereas Haiku 4.5 is released under the less restrictive AI Safety Level 2 (ASL-2) standard. The company states that, by these alignment measures, Haiku 4.5 is its safest model to date.

This heightened focus on safety aims to reduce the potential for misuse, such as generating instructions for creating weapons or prohibited substances, and reflects Anthropic’s stated safety-first approach.

Availability and Accessibility

Developers can now use Claude Haiku 4.5 through the Claude API, Amazon Bedrock, and Google Cloud’s Vertex AI. Anthropic offers it as a drop-in replacement for Haiku 3.5 and Sonnet 4, so developers already using those models can switch with minimal changes.
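In practice, a drop-in replacement usually means the request shape stays the same and only the model identifier changes. The sketch below builds two request bodies in the general shape of the Claude Messages API (`model`, `max_tokens`, `messages`) and checks that only the model field differs. The model identifiers are assumptions for illustration; check Anthropic's model documentation for the exact IDs, which may also differ on Amazon Bedrock and Vertex AI. No request is actually sent.

```python
import json

# Assumed model identifiers, for illustration only; verify against the official docs.
OLD_MODEL = "claude-3-5-haiku-latest"
NEW_MODEL = "claude-haiku-4-5"


def build_request(model: str, prompt: str, max_tokens: int = 512) -> dict:
    """Build a request body shaped like a Claude Messages API call."""
    return {
        "model": model,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }


before = build_request(OLD_MODEL, "Summarize this support ticket.")
after = build_request(NEW_MODEL, "Summarize this support ticket.")

# Collect the top-level fields whose values changed between the two requests.
changed = {key for key in before if before[key] != after[key]}
print(changed)
print(json.dumps(after, indent=2))
```

Keeping the model name in one configuration value like this makes switching models a one-line change.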

The Growing Importance of AI Model Efficiency

As artificial intelligence becomes more deeply integrated into daily life, demand for efficient, cost-effective AI models continues to grow. Reducing the computational resources and financial investment required to operate these models is crucial for wider adoption and accessibility. Models like Claude Haiku 4.5 represent a significant step toward meeting this need. The trend toward smaller, faster, and more secure models is expected to continue, with further innovations likely to emerge in the coming years.

Did You Know? The cost of training large language models can reach millions of dollars, making efficiency a critical factor for many organizations.

Pro Tip: When choosing an AI model, consider not only its performance metrics but also its cost and security implications.

Frequently Asked Questions about Claude Haiku 4.5

How does Sonnet 4.5’s AI-powered code synthesis impact the skill sets required of developers?

Revolutionary Coding Innovation: Triple the Cost Efficiency and Double the Speed of Sonnet 4.5

Understanding the Sonnet 4.5 Paradigm Shift

Sonnet 4.5 represents a notable leap forward in coding efficiency, reportedly delivering a threefold reduction in development costs and a doubling of project completion speed. This isn’t simply an incremental improvement; it’s a fundamental shift in how we approach software development. At its core, Sonnet 4.5 combines AI-assisted code generation, dynamic resource allocation, and a streamlined development workflow. This article examines the mechanics behind these advancements and how developers can take advantage of them. Key terms related to this innovation include low-code development, rapid application development (RAD), and DevOps automation.

The Core Technologies Driving Sonnet 4.5

Several key technologies combine to power Sonnet 4.5. Understanding them is crucial for getting the most out of it:

* AI-Powered Code Synthesis: Sonnet 4.5 doesn’t just suggest code; it generates functional code blocks from natural language descriptions of the desired functionality. This drastically reduces the amount of manual coding required. It relies on machine learning models trained on large code repositories.

* Dynamic Resource Allocation: Traditional development often suffers from resource bottlenecks. Sonnet 4.5 intelligently allocates computing resources (CPU, memory, and network bandwidth) to tasks based on real-time needs. This helps maintain performance and minimizes wasted capacity. This is a core component of cloud-native development.

* Automated Testing & Debugging: Integrated automated testing frameworks within Sonnet 4.5 identify and resolve bugs much earlier in the development cycle. This reduces debugging time and improves code quality. Continuous integration and continuous delivery (CI/CD) pipelines are fully supported.

* Modular Architecture & Component Reuse: Sonnet 4.5 promotes a highly modular architecture, encouraging the reuse of pre-built components. This accelerates development and reduces redundancy. This aligns with microservices architecture principles.
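Dynamic resource allocation can follow many policies, and the article does not say which one Sonnet 4.5 uses. As one illustration, the sketch below implements max-min fair sharing, a standard textbook policy: tasks whose demand fits within an equal share are served in full, and the capacity they leave unused is redistributed among the remaining tasks. The task names and figures are hypothetical.

```python
def max_min_fair(capacity: float, demands: dict[str, float]) -> dict[str, float]:
    """Split `capacity` across tasks using max-min fairness.

    Tasks asking for no more than an equal share receive their full demand;
    leftover capacity is redistributed among tasks that still want more.
    """
    allocation = {task: 0.0 for task in demands}
    unmet = dict(demands)      # tasks that have not been allocated yet
    remaining = capacity
    while unmet and remaining > 0:
        share = remaining / len(unmet)
        fits = {t: d for t, d in unmet.items() if d <= share}
        if not fits:
            # Every remaining task wants more than an equal share: split evenly.
            for t in unmet:
                allocation[t] = share
            break
        for t, d in fits.items():
            allocation[t] = d
            remaining -= d
            del unmet[t]
    return allocation


# Hypothetical example: 10 CPU cores shared by three tasks with different demands.
print(max_min_fair(10.0, {"build": 2.0, "tests": 4.0, "indexer": 10.0}))
```

Here the build and test tasks get everything they ask for, and the indexer receives the remaining 4 cores instead of starving the smaller tasks.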

Cost Efficiency: How Sonnet 4.5 Delivers 3x Savings

Here’s a breakdown of where the claimed threefold cost savings originate:

- Reduced Development Time: AI-assisted coding and rapid prototyping significantly shorten the development timeline. Less time spent coding translates directly into lower labor costs.

- Lower Labor Costs: Fewer developers are needed to achieve the same output. The platform makes existing developers more productive, reducing the need for large teams.

- Minimized Bug Fixing: Early detection and automated resolution of bugs reduce the costly rework associated with late-stage defects.

- Optimized Infrastructure Costs: Dynamic resource allocation means you pay only for the computing resources you actually use, reducing wasted spending. This is particularly impactful for serverless computing applications.

- Reduced Maintenance Overhead: The modular architecture and high code quality contribute to lower long-term maintenance costs.
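How these individual savings combine into an overall figure depends on how the budget is split. The sketch below, using a hypothetical budget split that is not from the article, computes the overall cost multiplier when each category's cost is divided by its own reduction factor. It shows that a threefold overall gain requires large reductions across the major cost categories, not just one.

```python
def overall_cost_multiplier(cost_shares: dict[str, float],
                            reductions: dict[str, float]) -> float:
    """Return how many times cheaper the whole project becomes.

    cost_shares: fraction of the original budget spent on each category (sums to 1).
    reductions:  factor by which each category's cost is divided (missing = unchanged).
    """
    new_cost = sum(share / reductions.get(category, 1.0)
                   for category, share in cost_shares.items())
    return 1.0 / new_cost


# Hypothetical budget split, for illustration only.
shares = {"labor": 0.6, "infrastructure": 0.2, "bug_fixing": 0.1, "maintenance": 0.1}

# Cutting only labor costs threefold yields far less than 3x overall.
print(round(overall_cost_multiplier(shares, {"labor": 3.0}), 2))
# Cutting every category threefold yields 3x overall.
print(round(overall_cost_multiplier(shares, {c: 3.0 for c in shares}), 2))
```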

Speed Enhancement: Doubling Project Velocity

Sonnet 4.5 doesn’t just save money; it accelerates project delivery. The doubling of speed is achieved through:

* Rapid Prototyping: Quickly create and iterate on prototypes to validate ideas and gather feedback.

* Parallel Development: The modular architecture allows multiple developers to work on different components concurrently with fewer conflicts.

* Automated Deployment: Streamlined deployment pipelines automate the process of releasing new features and updates.

* Faster Iteration Cycles: The combination of rapid prototyping, parallel development, and automated deployment enables faster iteration cycles, allowing you to respond to changing requirements more quickly. This is a key benefit of Agile development methodologies.
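A standard way to reason about how much parallel development can speed up a project is Amdahl's law. It is a general rule that the article does not cite, and treating developers as independent parallel workers ignores coordination overhead. The sketch below solves the law for the fraction of work that must be parallelizable to reach a claimed 2x speedup with a given number of parallel work streams.

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Amdahl's law: speedup when `parallel_fraction` of the work is split across `workers`."""
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / workers)


def required_parallel_fraction(target_speedup: float, workers: int) -> float:
    """Solve Amdahl's law for the parallel fraction needed to reach `target_speedup`."""
    if workers <= 1:
        raise ValueError("parallel speedup needs more than one worker")
    # 1/S = (1 - p) + p/n  =>  p = (1 - 1/S) / (1 - 1/n)
    return (1.0 - 1.0 / target_speedup) / (1.0 - 1.0 / workers)


for n in (2, 4, 8):
    p = required_parallel_fraction(2.0, n)
    print(f"{n} parallel streams: {p:.0%} of the work must be parallelizable for 2x")
```

With only two streams, every task would have to be parallelizable; with four, about two-thirds of the work must be. A result above 100% would mean the target speedup is unreachable with that many streams.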

Real-World Applications & Case Studies

While still relatively new, Sonnet 4.5 is reportedly already producing strong results in various industries.

* FinTech: A leading financial institution utilized Sonnet 4.5 to develop a new mobile banking application in half the time and at one-third the cost of previous projects. They reported a significant increase in user engagement.

* E-commerce: An online retailer leveraged Sonnet 4.5 to build a personalized recommendation engine, resulting in a 15% increase in sales.

* Healthcare: A healthcare provider used Sonnet 4.5 to create a patient portal, improving patient access to medical records and streamlining communication.

Practical Tips for Implementing Sonnet 4.5

Successfully adopting Sonnet 4.5 requires a strategic approach:

* Invest in Training: Ensure your developers are properly trained on the platform’s features and best practices.

* Start Small: Begin with a pilot project to gain experience and identify potential challenges.

* Embrace Modular Design: Design your applications with a modular architecture to maximize component reuse.

* Automate Everything: Leverage the platform’s automation capabilities to streamline your development workflow.

* Monitor Performance: Continuously monitor the performance of your applications to identify areas