Bianca González’s Four-Year Fight: A Mother’s Plea for Reconstructive Surgery After Devastating Accident

This is a developing story. archyde.com is following the case of Bianca González, a woman whose life was irrevocably altered by a traffic accident in 2021. What began as a medical emergency has become a four-year ordeal of multiple surgeries, a severe brain injury, and the loss of part of her skull. Her mother's appeal for reconstructive surgery shows how long the road to recovery can be for trauma survivors. The case also underscores the importance of patient advocacy and the often-overlooked long-term consequences of traumatic injuries.

The Accident and Initial Trauma

In 2021, Bianca González was involved in a traffic accident that required immediate head surgery. That first operation was meant to stabilize her condition, but it proved to be only the first step in a long and painful medical journey. Subsequent interventions led to a severe brain injury, forcing doctors to remove a section of her skull. Details of the accident itself remain limited, but the family's focus is now on securing the care Bianca needs.

“Four Years I Haven’t Stopped Suffering” – A Mother’s Heartbreak

In the words of Bianca's mother, "Four years I haven't stopped suffering." The phrase captures the emotional and financial toll the tragedy has taken on the family. The missing section of skull leaves Bianca vulnerable to further complications and significantly affects her quality of life. Reconstructive surgery offers hope, but gaining access to it remains a major hurdle. The case raises pressing questions about the availability of specialized medical care and the support available to long-term trauma patients.

The Complexities of Brain Injury and Reconstructive Surgery

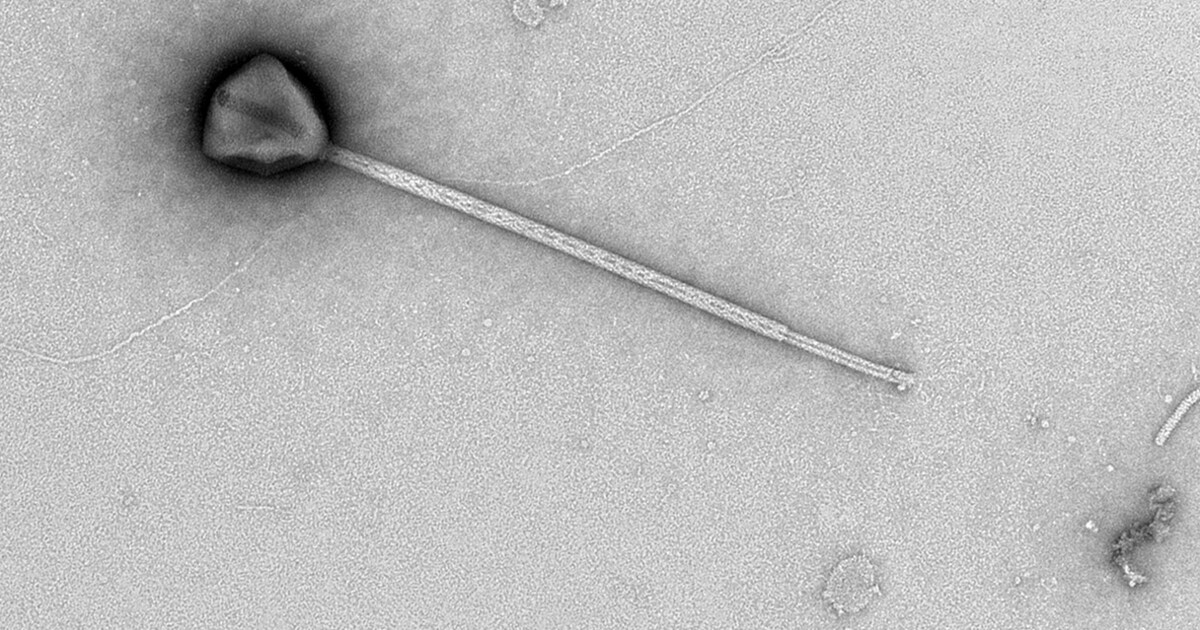

Brain injuries are notoriously complex, and recovery can take a lifetime. Removing part of the skull, a procedure known as a craniectomy, is sometimes necessary to relieve pressure on the brain after a severe injury. Patients who undergo it often later need a cranioplasty, the reconstructive surgery that replaces the missing bone. Both procedures are highly specialized and can be expensive. According to the Brain Injury Association of America, approximately 2.87 million Americans sustain a traumatic brain injury each year. The long-term costs, both human and financial, are staggering.

A Parallel Story: The Right to Choose – Euthanasia Debate

This story comes alongside another deeply personal and ethically difficult case: a person with a debilitating disease that destroys bones and joints has publicly requested euthanasia, saying, "This is not life." Although the two cases are distinct, both raise fundamental questions about quality of life and the right to self-determination in the face of overwhelming suffering. Euthanasia remains fiercely contested worldwide, with differing legal frameworks and deeply held moral beliefs. Together, the two stories show the range of challenges people face when confronted with severe medical conditions.

Navigating Medical Trauma: Resources and Support

Several resources are available to individuals and families dealing with the aftermath of medical trauma. The Brain Injury Association of America (https://www.biausa.org/) provides information, support, and advocacy. Organizations such as the National Center for Victims of Crime (https://victimconnect.org/) offer assistance to people affected by violent incidents. Understanding your rights as a patient and seeking legal counsel when appropriate are also important steps in navigating the medical system.

The story of Bianca González is a testament to human resilience and to a mother's unwavering love. As her family continues to fight for access to reconstructive surgery, archyde.com will provide updates on this developing situation. Questions of medical access, patient rights, and end-of-life choices are likely to remain at the forefront of public debate, and they deserve continued attention and compassionate understanding.