Petro Accuses EL TIEMPO of False Reporting in Police Pressure Scandal

Table of Contents

- 1. Petro Accuses EL TIEMPO of False Reporting in Police Pressure Scandal

- 2. What are the specific allegations made in the audio recordings regarding the 2022 presidential election?

- 3. Gustavo Petro’s Inquiry into Pressured Colonial Audio and EL TIEMPO’s Revelations

- 4. The Core of the Controversy: Allegations of Illicit Funding

- 5. EL TIEMPO’s Reporting: A Timeline of Events

- 6. The Colonial Connection: Understanding the Land Claims

- 7. Key Figures Involved

- 8. Legal Ramifications and Potential Outcomes

- 9. The Role of Media and Public Opinion

- 10. Impact on Investor Confidence and International Relations

- 11. Analyzing the Audio Evidence: Forensic Examination

- 12. The Broader Context: Colombia’s History of Political Scandals

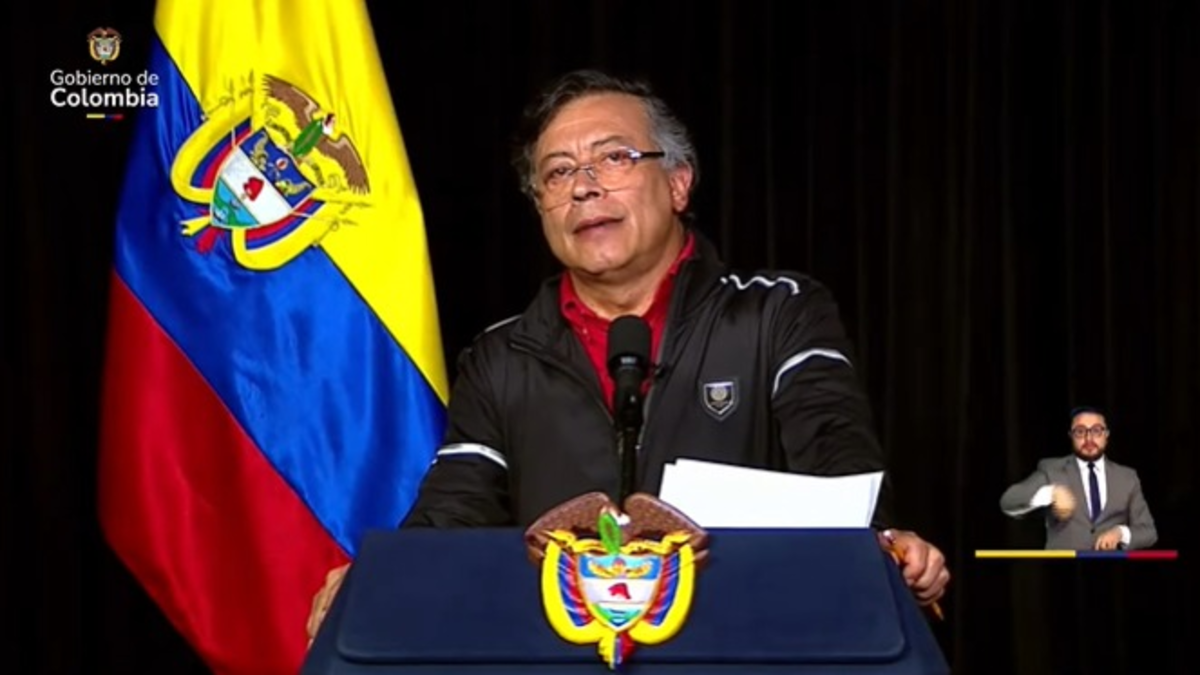

Bogotá, Colombia – November 29, 2025 – President Gustavo Petro publicly challenged reporting by Colombian newspaper EL TIEMPO regarding an audio recording alleging pressure on police colonels to provide information in exchange for career advancement. In a speech delivered Friday, November 28th, Petro claimed the newspaper misidentified a key individual within the recording, falsely attributing statements to a man named Wilmar who, according to the President, is not the person featured in the conversation.

The controversy stems from an audio recording published by EL TIEMPO on November 25th, purportedly revealing attempts to influence police officials. The recording allegedly features conversations between colonels discussing providing information about their superiors. Petro acknowledged the existence of the recordings and stated he relies on a variety of sources – including judicial records, the Attorney General’s Office, the Special Jurisdiction for Peace (JEP), disciplinary investigations, and intelligence reports – to assess personnel within the public force.

“In the topic of EL TIEMPO we find that some recordings are shown. It is said that there it would be confirmed how police officers tell a man named Wilmar to practically help him keep his job,” Petro stated. However, he continued, “I found it interesting in the EL TIEMPO article to discover the lie…because in the conversation…he is not Mr. Wilmar.” The President has demanded a retraction from EL TIEMPO, which has yet to be issued.

EL TIEMPO’s reporting indicates that Defense Minister Pedro Sánchez confirmed receiving complaints over two months ago regarding pressure being exerted on colonels. Minister Sánchez stated he immediately activated protocols to investigate both the allegations of pressure and claims that they were unfounded.

Further complicating the situation, EL TIEMPO reported that Minister Sánchez reinstated Colonel Flaminio Quitián – a figure mentioned by President Petro – after learning of his successful operational record against the Gulf Clan criminal organization. The President’s challenge to the newspaper’s accuracy adds another layer to the unfolding situation, raising questions about the integrity of the reporting and the potential for political motivations.

The case remains under investigation, with potential implications for the Colombian National Police and the ongoing efforts to combat corruption within the country’s security forces.

What are the specific allegations made in the audio recordings regarding the 2022 presidential election?

Gustavo Petro’s Inquiry into Pressured Colonial Audio and EL TIEMPO’s Revelations

The Core of the Controversy: Allegations of Illicit Funding

The recent inquiry launched by Colombian President Gustavo Petro centers on audio recordings suggesting pressure exerted on a former colonial-era official, potentially linked to illicit campaign financing. These recordings, initially brought to light by EL TIEMPO, a leading Colombian newspaper, have ignited a political firestorm and prompted a formal examination into potential corruption and electoral interference. The central claim revolves around alleged attempts to influence the outcome of the 2022 presidential election. Key terms driving search interest include “Petro investigation,” “Colombia election fraud,” and “EL TIEMPO revelations.”

EL TIEMPO’s Reporting: A Timeline of Events

EL TIEMPO’s reporting has been pivotal in unfolding the narrative. Here’s a breakdown of key events as reported by the newspaper:

- Initial Leak (October 2024): The first audio recordings surfaced, allegedly featuring conversations between a businessman, a former official linked to the colonial era, and individuals connected to the Petro campaign.

- Content of the Recordings: The audio purportedly details requests for significant financial contributions to the campaign in exchange for favorable treatment regarding colonial-era land claims.

- Petro’s Response: President Petro initially dismissed the allegations as a smear campaign orchestrated by his political opponents. However, mounting pressure led to the announcement of an internal inquiry.

- Formal Investigation (November 2025): The Attorney General’s Office officially launched a formal investigation into the allegations, subpoenaing individuals mentioned in the recordings.

- Further Revelations: EL TIEMPO continued to publish additional recordings and investigative reports, adding layers of complexity to the case. Related searches include “Colombia political scandal” and “Petro campaign finance.”

The Colonial Connection: Understanding the Land Claims

The controversy is deeply rooted in historical land claims stemming from Colombia’s colonial past. Many Indigenous and Afro-Colombian communities have long sought the restitution of lands historically taken from them. The audio recordings suggest that campaign contributions were linked to promises of expedited or favorable rulings on these land claims. This aspect of the case has drawn attention from human rights organizations and land rights advocates. Keywords like “land restitution Colombia,” “Afro-Colombian land rights,” and “indigenous land claims” are gaining traction.

Key Figures Involved

* Gustavo Petro: The current President of Colombia, whose campaign is at the center of the allegations.

* The Businessman (Name withheld pending investigation): Allegedly the primary source of the illicit funding.

* Former Colonial-Era Official (Name withheld pending investigation): The individual allegedly pressured to facilitate the financial contributions.

* EL TIEMPO Investigative Team: The journalists responsible for uncovering and reporting on the story.

* The Attorney General: Leading the official investigation into the allegations.

Legal Ramifications and Potential Outcomes

The investigation could have significant legal ramifications. Potential charges include:

* Illegal Campaign Financing: Violations of Colombian electoral law regarding campaign contributions.

* Corruption: Bribery and abuse of power.

* Influence Peddling: Using political influence for personal gain.

* Obstruction of Justice: Any attempts to hinder the investigation.

The potential outcomes range from exoneration to impeachment proceedings against President Petro. The investigation’s progress will be closely monitored by both domestic and international observers. Search terms like “Colombia impeachment,” “Petro legal challenges,” and “Colombian electoral law” are relevant here.

The Role of Media and Public Opinion

EL TIEMPO’s reporting has played a crucial role in shaping public opinion. The newspaper’s extensive coverage has kept the issue in the public eye and fueled calls for transparency and accountability. However, the media landscape in Colombia is polarized, and other news outlets have presented differing perspectives on the allegations. This has led to a highly charged political environment. Related keywords include “Colombian media bias,” “Petro approval rating,” and “Colombia political polarization.”

Impact on Investor Confidence and International Relations

The scandal has raised concerns about investor confidence in Colombia. Political instability and allegations of corruption can deter foreign investment and hinder economic growth. Furthermore, the controversy could strain Colombia’s relations with international partners, notably those who prioritize transparency and good governance. Searches like “Colombia investment climate,” “foreign investment Colombia,” and “Colombia international relations” are increasing.

Analyzing the Audio Evidence: Forensic Examination

Forensic analysis of the audio recordings is a critical component of the investigation. Experts are examining the recordings to verify their authenticity, identify the speakers, and determine whether they have been edited or manipulated. The results of this analysis will be crucial in determining the credibility of the evidence. Keywords include “audio forensics Colombia,” “digital evidence analysis,” and “audio authentication.”

The Broader Context: Colombia’s History of Political Scandals

Colombia has a history of political scandals and corruption. This latest controversy is not an isolated incident but rather part of a broader pattern of political malfeasance. Understanding this historical context is essential for interpreting the current situation. Related searches include “Colombia corruption index,” “Colombian political history,” and “past