The Future of Automation: Four Key AI Trends Reshaping Robotics in 2026 and Beyond

Table of Contents

- 1. The Future of Automation: Four Key AI Trends Reshaping Robotics in 2026 and Beyond

- 2. Predictive Mathematics: The Rise of Anticipatory Robotics

- 3. Cooperative Robotics: Robots Working in Harmony

- 4. AI-Powered Perception: Seeing and Understanding the World

- 5. Reinforcement Learning: Robots Learning Through Experience

- 6. A Comparative Look at AI Integration in Robotics

- 7. How will foundation models impact robotic development timelines?

- 8. Universal Robots’ AI Leader Unveils Four Key Physical AI Trends Shaping Robotics in 2026 and Beyond

- 9. 1. Embodied AI: The Rise of Robots That Truly Understand Their Habitat

- 10. 2. Generative AI for Robotics: From Simulation to Real-World Deployment

- 11. 3. Foundation Models for Robotics: The Power of Pre-trained Intelligence

- 12. 4. Neuro-Symbolic AI: Combining the Best of Both Worlds

- 13. The Future of Physical AI

The landscape of robotics is undergoing a rapid transformation, driven by advancements in Artificial Intelligence. Experts predict significant shifts in how robots perceive, interact with, and operate within the physical world by 2026 and in the years following. Understanding these emerging trends is crucial for businesses and individuals alike, as automation continues to permeate nearly every facet of modern life.

Predictive Mathematics: The Rise of Anticipatory Robotics

One of the most significant developments is the increasing integration of predictive mathematics into robotic systems. This allows robots to anticipate future states and events, optimizing their actions in real time. Instead of simply reacting to stimuli, these robots can proactively adjust their behavior, making them more efficient and adaptable. This technology, drawing from fields like machine learning and statistical modeling, is already being implemented in logistics and manufacturing.
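Here is a minimal sketch of what "anticipating future states" can mean in practice. It assumes the simplest possible predictive model: constant-velocity extrapolation of an item moving along a conveyor. Real systems would more likely use a Kalman filter or a learned model. The names `Observation`, `predict_position`, and `intercept_target`, and the conveyor scenario itself, are illustrative assumptions. They are not any vendor's API.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    t: float  # timestamp in seconds
    x: float  # item position along the conveyor in metres (hypothetical setup)


def predict_position(prev: Observation, curr: Observation, t_future: float) -> float:
    """Extrapolate the item's position at t_future, assuming constant velocity."""
    dt = curr.t - prev.t
    if dt <= 0:
        raise ValueError("observations must be in strictly increasing time order")
    velocity = (curr.x - prev.x) / dt
    return curr.x + velocity * (t_future - curr.t)


def intercept_target(prev: Observation, curr: Observation, arm_latency: float) -> float:
    """Aim where the item will be when the arm arrives, not where it is now."""
    return predict_position(prev, curr, curr.t + arm_latency)


if __name__ == "__main__":
    seen_before = Observation(t=0.0, x=0.10)
    seen_now = Observation(t=0.5, x=0.35)  # item moving at roughly 0.5 m/s
    # A purely reactive robot would reach for x = 0.35; an anticipatory one aims ahead.
    print(f"target x = {intercept_target(seen_before, seen_now, arm_latency=0.8):.2f} m")
```

The key design choice is that the arm does not reach for where the item is now. It aims for where the item will be once the arm's own motion latency has elapsed.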

Cooperative Robotics: Robots Working in Harmony

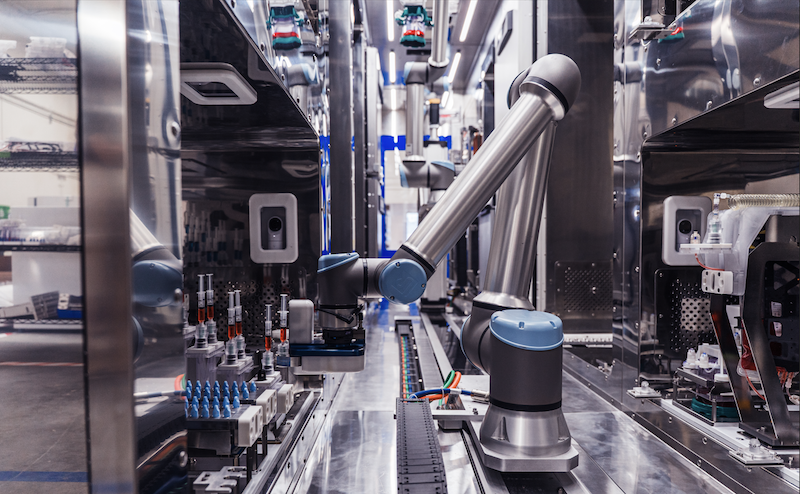

The concept of collaborative robots, often referred to as “cobots,” is gaining momentum. These robots are specifically designed to work alongside humans in shared workspaces, enhancing productivity and safety. Unlike traditional industrial robots, cobots often operate without extensive safety guarding, relying instead on advanced sensors and algorithms to ensure safe human-robot interaction. A recent report by the International Federation of Robotics shows a 13% increase in cobot sales globally in 2023, indicating growing demand.
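One common way to turn sensor data into safe behavior is speed and separation monitoring, one of the collaborative operating modes described in ISO 10218 and ISO/TS 15066. The idea is simple: the closer a person gets, the slower the robot moves. The sketch below assumes a single distance reading to the nearest person and a linear speed ramp. The function name and all threshold values are hypothetical and are not figures taken from the standard.

```python
def allowed_speed(distance_m: float,
                  stop_distance: float = 0.5,
                  slow_distance: float = 1.5,
                  max_speed: float = 1.0) -> float:
    """Permitted tool speed (m/s) given the distance to the nearest person (m).

    The default thresholds are hypothetical placeholders, not values taken
    from ISO/TS 15066 or any vendor's safety configuration.
    """
    if not 0 <= stop_distance < slow_distance:
        raise ValueError("require 0 <= stop_distance < slow_distance")
    if distance_m <= stop_distance:
        return 0.0  # protective stop: a person is too close
    if distance_m >= slow_distance:
        return max_speed  # nobody nearby: run at full speed
    # In between, ramp speed linearly with distance.
    fraction = (distance_m - stop_distance) / (slow_distance - stop_distance)
    return max_speed * fraction


if __name__ == "__main__":
    for d in (2.0, 1.0, 0.4):  # e.g. readings from a hypothetical area scanner
        print(f"person at {d} m -> speed {allowed_speed(d):.2f} m/s")
```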

AI-Powered Perception: Seeing and Understanding the World

Advancements in computer vision and sensor technology are allowing robots to “see” and understand their surroundings with unprecedented accuracy. This includes not only object recognition but also the ability to interpret complex scenes, detect anomalies, and navigate dynamic environments. This enhanced perception is crucial for applications such as autonomous vehicles, robotic surgery, and quality control in manufacturing. Companies like NVIDIA are investing heavily in developing AI platforms specifically tailored for robotic perception.
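To show what learned perception improves on, here is a minimal sketch of a classical anomaly check used in quality control: golden-template comparison. Each captured image is compared pixel by pixel against a known-good reference. This shows only the baseline and is not an AI model. The function names, the 0-255 grayscale list representation, and both thresholds are assumptions chosen for illustration.

```python
Image = list[list[int]]  # grayscale pixels, 0-255 (assumed representation)


def anomaly_mask(reference: Image, captured: Image, threshold: int = 40) -> list[list[bool]]:
    """Mark pixels whose brightness differs from the golden reference by more than threshold."""
    if len(reference) != len(captured) or any(
        len(r) != len(c) for r, c in zip(reference, captured)
    ):
        raise ValueError("images must have the same shape")
    return [
        [abs(r - c) > threshold for r, c in zip(ref_row, cap_row)]
        for ref_row, cap_row in zip(reference, captured)
    ]


def is_defective(reference: Image, captured: Image,
                 threshold: int = 40, max_bad_fraction: float = 0.01) -> bool:
    """Flag the part if too large a fraction of pixels deviates from the reference."""
    mask = anomaly_mask(reference, captured, threshold)
    total = sum(len(row) for row in mask)
    if total == 0:
        raise ValueError("empty image")
    bad = sum(sum(row) for row in mask)  # each True counts as 1
    return bad / total > max_bad_fraction


if __name__ == "__main__":
    golden = [[120] * 20 for _ in range(20)]
    scratched = [row[:] for row in golden]
    for i in range(5):
        scratched[10][i] = 250  # a bright 5-pixel scratch
    print(is_defective(golden, golden), is_defective(golden, scratched))
```

A learned model earns its place where this baseline breaks down, for example when lighting, part position, or surface texture varies between shots.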

Reinforcement Learning: Robots Learning Through Experience

Reinforcement learning (RL) is enabling robots to learn complex tasks through trial and error, without being explicitly programmed for each step. By rewarding desired behaviors and penalizing undesired ones, RL algorithms allow robots to adapt to new situations and improve their performance over time. This is especially valuable in unstructured environments where it is difficult to anticipate every possible scenario. A prime example is Boston Dynamics’ robots, which continually refine their movements through RL.
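The reward-and-penalty loop described above can be illustrated with tabular Q-learning, one of the simplest RL algorithms. This is a minimal sketch on a hypothetical toy task: a robot in a six-cell corridor that must learn to reach the last cell. It is not how Boston Dynamics or any other company trains its robots. The reward values and hyperparameters are arbitrary assumptions.

```python
import random

N_STATES = 6            # corridor cells 0..5 (hypothetical toy task)
GOAL = N_STATES - 1     # reaching cell 5 ends the episode
ACTIONS = (-1, +1)      # move left or move right


def step(state: int, action: int) -> tuple[int, float, bool]:
    """Toy environment: reward 1.0 at the goal, a small penalty for every other move."""
    next_state = min(max(state + action, 0), GOAL)
    if next_state == GOAL:
        return next_state, 1.0, True
    return next_state, -0.01, False


def greedy(q_row: list[float]) -> int:
    """Index of the action with the highest estimated value (ties go to index 0)."""
    return max(range(len(ACTIONS)), key=lambda i: q_row[i])


def train(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
          epsilon: float = 0.1, max_steps: int = 100, seed: int = 0) -> list[list[float]]:
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            # Epsilon-greedy: usually exploit what was learned, sometimes explore.
            a = rng.randrange(len(ACTIONS)) if rng.random() < epsilon else greedy(q[state])
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning update toward reward plus discounted best future value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
            if done:
                break
    return q


if __name__ == "__main__":
    q_table = train()
    print([ACTIONS[greedy(q_table[s])] for s in range(GOAL)])
```

After training, the greedy policy for cells 0 to 4 should be to always move right (`1`). The code never states that rule; it emerges from the rewards. Real robots replace the table with a neural network and the corridor with a physics simulator or hardware.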

A Comparative Look at AI Integration in Robotics

| Trend | Description | Key Application |
|---|---|---|
| Predictive Mathematics | Robots anticipate future states for optimized actions. | Supply Chain Management |
| Cooperative Robotics | Robots collaborate safely with humans in shared spaces. | Automotive Assembly |
| AI-Powered Perception | Robots “see” and understand their surroundings accurately. | Autonomous Navigation |
| Reinforcement Learning | Robots learn through trial and error. | Complex Manipulation Tasks |

The convergence of these four trends promises a future where robots are more intelligent, adaptable, and integrated into our daily lives. These advancements will have profound implications for industries ranging from manufacturing and logistics to healthcare and education.

As robotics continues to evolve, ethical considerations and workforce adaptation will become increasingly vital. Preparing for a future shaped by automation requires proactive investment in education, training, and responsible AI development.

What impact do you think these trends will have on the job market? How can we ensure a smooth transition into a more automated future?

Share your thoughts in the comments below.

How will foundation models impact robotic development timelines?

Universal Robots’ AI Leader Unveils Four Key Physical AI Trends Shaping Robotics in 2026 and Beyond

The landscape of robotics is undergoing a rapid transformation, driven by advancements in Artificial Intelligence. At a recent industry summit, Universal Robots’ Chief AI Officer, Dr. Anya Sharma, outlined four pivotal trends in Physical AI poised to redefine the capabilities and applications of robots in the coming years. These aren’t just incremental improvements; they represent fundamental shifts in how robots perceive, learn, and interact with the physical world.

1. Embodied AI: The Rise of Robots That Truly Understand Their Habitat

For years, robotics has largely focused on programming robots to execute tasks. Embodied AI flips this script. It’s about equipping robots with the ability to develop a deep understanding of their surroundings: not just recognizing objects, but comprehending their properties, relationships, and potential interactions.

This goes beyond simple computer vision. Embodied AI leverages multi-modal sensors, combining vision, tactile sensing, force/torque sensors, and even audio, to create a holistic perception of the environment. Think of a robot assembling electronics. Previously, it needed precise instructions for each component. With Embodied AI, it can infer how parts fit together, adapt to slight variations, and even identify potential assembly errors.
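One basic building block behind combining vision, touch, and force readings is sensor fusion. The minimal sketch below uses inverse-variance weighting. It assumes each sensor gives an independent estimate with Gaussian noise of known variance. The part-offset scenario, the noise figures, and the names `Estimate` and `fuse` are hypothetical. An embodied AI system would typically learn far richer fusion than this.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    value: float     # e.g. estimated offset of a part from its slot, in mm
    variance: float  # sensor noise variance, in mm^2


def fuse(estimates: list[Estimate]) -> Estimate:
    """Inverse-variance weighted fusion of independent, Gaussian-noise estimates."""
    if not estimates:
        raise ValueError("need at least one estimate")
    if any(e.variance <= 0 for e in estimates):
        raise ValueError("variances must be positive")
    weights = [1.0 / e.variance for e in estimates]  # trust precise sensors more
    total = sum(weights)
    value = sum(w * e.value for w, e in zip(weights, estimates)) / total
    return Estimate(value=value, variance=1.0 / total)  # fused result is more certain


if __name__ == "__main__":
    vision = Estimate(value=2.0, variance=1.0)    # camera: coarse at close range
    tactile = Estimate(value=1.0, variance=0.25)  # fingertip contact: precise
    print(fuse([vision, tactile]))
```

With these numbers the fused offset lands at 1.2 mm with variance 0.2 mm². That is closer to the more precise tactile reading and more certain than either sensor alone.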

Benefits of Embodied AI:

* Increased Adaptability: Robots can handle unstructured environments and unexpected situations.

* Reduced Programming Effort: Less need for explicit programming, as robots learn through interaction.

* Enhanced Safety: Better understanding of surroundings leads to safer human-robot collaboration.

2. Generative AI for Robotics: From Simulation to Real-World Deployment

Generative AI, popularized by tools like ChatGPT, is now making meaningful inroads into robotics. Dr. Sharma highlighted its potential to bridge the “sim-to-real” gap – the notorious challenge of transferring skills learned in simulated environments to the complexities of the real world.

Traditionally, creating realistic simulations for robot training has been incredibly time-consuming and computationally expensive. Generative AI can now automatically generate diverse and realistic simulation scenarios, exposing robots to a wider range of conditions and accelerating the learning process.

Furthermore, generative models are being used to design robot grippers and end-effectors optimized for specific tasks, reducing the need for costly physical prototyping. This is particularly impactful for applications requiring delicate handling or specialized tools.

Practical Tips for Implementation:

* Focus on Data Diversity: The quality of generative AI models depends on the diversity of the training data.

* Utilize Domain Randomization: Introduce variations in simulation parameters (lighting, textures, object positions) to improve robustness.

* Combine with Reinforcement Learning: Use generative AI to create challenging scenarios for reinforcement learning algorithms.
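Domain randomization, from the tips above, amounts to sampling a fresh set of simulation parameters for every training scenario. The sketch below assumes a simulator configured by lighting, texture, object position, and friction. The `SimConfig` fields, their ranges, and the function names are hypothetical. They are not tied to any particular simulator's API.

```python
import random
from dataclasses import dataclass


@dataclass
class SimConfig:
    light_intensity: float          # arbitrary units (assumed range below)
    texture_id: int                 # index into a hypothetical texture library
    object_xy: tuple[float, float]  # object position on the table, in metres
    friction: float                 # surface friction coefficient


def sample_config(rng: random.Random) -> SimConfig:
    """Draw one randomized scenario; every range here is an illustrative assumption."""
    return SimConfig(
        light_intensity=rng.uniform(0.3, 1.5),
        texture_id=rng.randrange(50),
        object_xy=(rng.uniform(-0.2, 0.2), rng.uniform(-0.2, 0.2)),
        friction=rng.uniform(0.4, 1.0),
    )


def make_training_scenarios(n: int, seed: int = 0) -> list[SimConfig]:
    rng = random.Random(seed)  # seeded so experiments are reproducible
    return [sample_config(rng) for _ in range(n)]


if __name__ == "__main__":
    for cfg in make_training_scenarios(3, seed=42):
        print(cfg)
```

Each `SimConfig` would then be handed to whatever simulator is in use. Seeding the generator keeps experiments reproducible while still exposing the policy to wide variation.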

3. Foundation Models for Robotics: The Power of Pre-trained Intelligence

Just as large language models (LLMs) have revolutionized natural language processing, foundation models are emerging as a game-changer in robotics. These are massive AI models pre-trained on vast robotics datasets encompassing images, sensor readings, and robot actions.

The key advantage? These models can be quickly adapted to new tasks with minimal fine-tuning. Instead of training a robot from scratch for each new application, developers can leverage the pre-trained intelligence of a foundation model, significantly reducing development time and cost.
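A common way to adapt a pre-trained model with minimal fine-tuning is to freeze its weights and train only a small task-specific head on its features, often called a linear probe. The sketch below uses a toy stand-in for the backbone. `frozen_backbone` is a fixed, hand-written feature map, not a real foundation model. The "new task" and all function names are hypothetical.

```python
import math
import random


def frozen_backbone(x: tuple[float, float]) -> list[float]:
    """Hypothetical stand-in for a pre-trained model's feature extractor.

    It is a fixed, hand-written feature map that is never updated below,
    which mirrors freezing a real backbone's weights.
    """
    a, b = x
    return [a, b, a * b, 1.0]


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def train_head(data: list[tuple[tuple[float, float], int]],
               epochs: int = 200, lr: float = 0.5) -> list[float]:
    """Fit only a small logistic-regression head on top of the frozen features."""
    w = [0.0] * len(frozen_backbone(data[0][0]))
    for _ in range(epochs):
        for x, y in data:
            f = frozen_backbone(x)
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)))
            # Log-loss gradient with respect to the head weights only.
            w = [wi - lr * (p - y) * fi for wi, fi in zip(w, f)]
    return w


def predict(w: list[float], x: tuple[float, float]) -> int:
    return int(sum(wi * fi for wi, fi in zip(w, frozen_backbone(x))) > 0)


def make_task_data(n: int, seed: int = 0) -> list[tuple[tuple[float, float], int]]:
    """Hypothetical 'new task': the label is 1 when both inputs share a sign."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        a = rng.choice((-1, 1)) * rng.uniform(0.5, 1.0)
        b = rng.choice((-1, 1)) * rng.uniform(0.5, 1.0)
        data.append(((a, b), int(a * b > 0)))
    return data


if __name__ == "__main__":
    head = train_head(make_task_data(40))
    test_set = make_task_data(100, seed=1)
    accuracy = sum(predict(head, x) == y for x, y in test_set) / len(test_set)
    print(f"held-out accuracy: {accuracy:.2f}")
```

Only four head weights are learned here. In practice the same pattern is applied to features from a large pre-trained network, which is one reason adapting such a model can be cheaper than training from scratch.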

Universal Robots is actively contributing to the development of open-source foundation models for robotics, fostering collaboration and accelerating innovation across the industry. This collaborative approach is crucial for democratizing access to advanced robotic capabilities.

Real-World Example:

BMW Group has been utilizing foundation models to accelerate the development of robotic solutions for its manufacturing facilities. By leveraging pre-trained models, they’ve reduced the time required to deploy new robotic applications by up to 50%.

4. Neuro-Symbolic AI: Combining the Best of Both Worlds

Conventional AI approaches often fall into two camps: neural networks (excellent at pattern recognition but weak at explicit reasoning) and symbolic AI (strong in logic and reasoning but brittle when dealing with noisy real-world data). Neuro-symbolic AI aims to combine the strengths of both.

This involves integrating neural networks with symbolic reasoning systems, allowing robots not only to perceive their environment but also to understand it at a higher level of abstraction. For example, a robot tasked with cleaning a room could use neural networks to identify objects and symbolic reasoning to plan a cleaning strategy based on the room’s layout and the objects’ properties.
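The division of labor in the cleaning example can be sketched as follows. The detector outputs are hard-coded stand-ins for what a neural network would produce. The property table and rules are hypothetical. The point is the hand-off: confident neural detections become discrete facts that a small rule-based planner reasons over.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str         # class predicted by a (hypothetical) neural detector
    confidence: float  # detector score in [0, 1]
    location: str      # coarse place, e.g. "floor" or "table"


# Symbolic knowledge base: hand-written object properties (illustrative only).
PROPERTIES = {
    "cup": {"fragile", "dishware"},
    "plate": {"fragile", "dishware"},
    "sock": {"laundry"},
    "toy": {"clutter"},
}


def to_facts(detections: list[Detection], min_conf: float = 0.6) -> list[tuple[str, str]]:
    """Neural -> symbolic: keep confident detections as discrete (label, location) facts."""
    return [(d.label, d.location) for d in detections if d.confidence >= min_conf]


def plan(facts: list[tuple[str, str]]) -> list[str]:
    """Apply simple ordered rules to turn facts into a cleaning plan."""
    steps = []
    # Rule 1: move fragile items out of the way first so nothing gets broken.
    for label, loc in facts:
        if "fragile" in PROPERTIES.get(label, set()):
            steps.append(f"carry {label} from {loc} to kitchen")
    # Rule 2: clear everything else off the floor.
    for label, loc in facts:
        props = PROPERTIES.get(label, set())
        if loc != "floor" or "fragile" in props:
            continue
        if "laundry" in props:
            steps.append(f"put {label} in laundry basket")
        elif "clutter" in props:
            steps.append(f"put {label} in toy box")
        else:
            steps.append(f"set {label} aside for a human to check")
    # Rule 3: vacuum only after the floor has been cleared.
    steps.append("vacuum floor")
    return steps


if __name__ == "__main__":
    detections = [
        Detection("cup", 0.92, "table"),
        Detection("sock", 0.81, "floor"),
        Detection("toy", 0.40, "floor"),  # too uncertain: not turned into a fact
    ]
    for step in plan(to_facts(detections)):
        print(step)
```

The low-confidence `toy` detection never becomes a fact, so the planner ignores it. Making that threshold explicit is one practical advantage of keeping the symbolic layer separate.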

Case Study: Advanced Logistics Automation

Several logistics companies are now implementing neuro-symbolic AI to optimize warehouse operations. Robots can dynamically adjust picking routes based on real-time inventory levels, order priorities, and potential obstacles, leading to significant improvements in efficiency and throughput. This marks a move beyond simple automated guided vehicles (AGVs) toward truly intelligent robotic logistics solutions.
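The re-planning behavior described here can be illustrated with the symbolic half alone. The sketch below is a breadth-first-search route planner on a warehouse grid. It picks the most urgent reachable order and re-plans when an obstacle appears. The grid encoding, priority scheme, and function names are hypothetical. In a neuro-symbolic system, the obstacle map and order priorities would come from perception and inventory systems rather than being typed in.

```python
from collections import deque
from typing import Optional

Cell = tuple[int, int]  # (row, column)


def shortest_path_len(grid: list[str], start: Cell, goal: Cell) -> Optional[int]:
    """Breadth-first search on a warehouse grid: '.' is free aisle, '#' is blocked."""
    rows, cols = len(grid), len(grid[0])
    queue = deque([(start, 0)])
    seen = {start}
    while queue:
        (r, c), dist = queue.popleft()
        if (r, c) == goal:
            return dist
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] != "#" and (nr, nc) not in seen):
                seen.add((nr, nc))
                queue.append(((nr, nc), dist + 1))
    return None  # goal is currently unreachable


def next_pick(grid: list[str], robot: Cell,
              picks: list[tuple[int, Cell]]) -> Optional[Cell]:
    """Choose the most urgent reachable pick; break ties by travel distance.

    picks holds (priority, location) pairs; a lower number means more urgent.
    """
    candidates = []
    for priority, loc in picks:
        dist = shortest_path_len(grid, robot, loc)
        if dist is not None:
            candidates.append((priority, dist, loc))
    return min(candidates)[2] if candidates else None


if __name__ == "__main__":
    aisles = ["....",
              ".##.",
              "...."]
    print(next_pick(aisles, (0, 0), [(2, (0, 3)), (1, (2, 3))]))
    # A pallet now blocks column 2, so the (0, 3) pick becomes unreachable.
    blocked = ["..#.",
               "..#.",
               "..#."]
    print(next_pick(blocked, (0, 0), [(1, (0, 3)), (2, (2, 0))]))
```

When the pallet blocks column 2, the urgent pick at `(0, 3)` becomes unreachable. The robot then switches to the next reachable order instead of stalling.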

The Future of Physical AI

Dr. Sharma emphasized that these four trends are not isolated developments. They are converging to create a new era of robotics – one where robots are more adaptable, intelligent, and capable of solving complex real-world problems. The focus is shifting from automating tasks to augmenting human capabilities, creating a future where humans and robots work seamlessly together.